The Bull Case for Software, ASML, Credo, Copper vs Optics

This week's findings

The Bull Case for Software

We’re taking a contrarian view here and actually see AI as bringing more opportunity than risk for the quality software names, with quality defined as software that has high switching costs and/or requires a large amount of user training. Jensen sees a similar scenario playing out. His comments from this week:

“In our case, chip design and system engineering, we partnered with Synopsys, Cadence, Siemens and Dassault, so that we could insert our technology and whatever they need, so that we could revolutionize the tools which we use to design what we do. We use Synopsys everywhere, Cadence, Siemens and Dassault, and I will make sure that they have 100% or whatever they want so that I have the tools necessary to create the next generation.”

So, Jensen’s key aim is for AI to boost the productivity of his engineers by as much as possible. By taking over more workloads, AI in combination with EDA software can boost productivity multifold. The bull case for the EDA names, such as Synopsys and Cadence, is to monetize part of this new economic value added. We see Jensen’s key goal here not as saving 1 point of margin by trying to rip out and replace complicated software systems, with questionable odds of success, but as multiplying the productivity of his workforce by as much as possible.

We think this is true for a lot of good software tools. For example, in Excel, we see the most likely scenario being that analysts will use spreadsheets with AI built-in. So, Claude will first build a complicated spreadsheet and then analysts tweak parameters or certain formulas to get the final output that they want. This is also how we’re currently writing code for our software projects. Our usage of IDE software tools hasn’t decreased, it has increased. Why? Because with the help of AI it has become much easier to write large amounts of code, and we can modify it inside an IDE to finally deploy it. Thus, we’re writing more code than ever before and our IDE usage has increased as a result.

This also gives us good insights into the quality of the code Claude is actually writing. And we think this is where a lot of confusion is coming from. For people who don’t understand code, it will seem magical. I give Claude a prompt, it writes lines of code, I test it on my computer, and it works. “You can go to localhost right now to check out my new app”, we’ve seen written on Reddit. So, we can see why some are jumping to the conclusion that “SaaS is toast as I can easily write my own SaaS now.”

Well, there’s a big difference between code that’s good enough to run on your local machine and code that you can actually ship to run crucial enterprise workloads on. Production-quality code has to fulfill all three of the following criteria: it has to be secure, it has to be scalable, and it has to be cost efficient. Currently, the code that AI is writing fulfills none of those three. Zero. In simple terms, it writes a lot of useful code but it also does a lot of stupid stuff. And we’re not the only ones saying this; Google reports something similar. This is from the recent call:

“About 50% of our code is written by agents, which are then reviewed by our own engineers. But certainly, it helps our engineers do more and move faster with the current footprint.”

In other words, heavy human oversight is needed for production-grade code. Andrej Karpathy, who coined ‘vibe coding’, also points out that oversight and scrutiny remain crucial:

“Today (1 year later), programming via LLM agents is increasingly becoming a default workflow for professionals, except with more oversight and scrutiny. The goal is to claim the leverage from the use of agents but without any compromise on the quality of the software.”

Yes, AI massively boosts coding productivity. But at the same time, it does a lot of stupid stuff. So we don’t see enterprises spinning up tons of AI agents to write a huge code base that they can safely deploy for a crucial workload, e.g. warehouse management, any time soon. Remember, an ERP system has 10 to 100 million lines of code, and no enterprise is going to deploy a system that hasn’t been fully vetted and battle-tested. If your ERP suddenly crashes, this is an existential risk even for a giant like Walmart. It can take the whole company down.

The other problem is that this AI agent approach remains hugely expensive on large code bases. We have the most expensive Claude plan (£100 per month or so), and when we give Claude a large code base, we burn through our credits in a few prompts. So, Anthropic then notifies us that we have to take a timeout and can start coding again in 5 hours. Obviously, this isn’t great. For a large code base, we find it much easier to give Claude a narrow set of code, e.g. a few example functions and some context, and then explain the code it needs to write next. This way, Claude can write huge amounts of code without burning through all our credits.

So, are enterprises replacing best-in-class software with internally developed tools? While there will be some instances where this happens, we think that this will be fairly limited. For example, let’s say I want to replace my supply chain management software with an internal, more minimalistic tool. I would still have to hire a team of engineers to build and maintain it. Those engineers aren’t cheap, plus if they leave, it might be hard to find replacements who can understand and update that code base. So, we think it still makes much more sense to purchase battle-tested, secure software off the shelf that corporations know they can scale and can trust to run their operations on.

And it’s not like cheap software hasn’t been available already. Free open source software has been available for decades, yet it hasn’t been able to disrupt the growth story for the best-in-class software names. A key reason is that even if you deploy open source software, you need to hire an engineering team to manage it. For example, in databases, there are plenty of free tools available. However, if you want to deploy one, you need skilled engineers who know how to spin up a cluster in AWS, for example, deploy the open source database on this cluster, and manage the backups, software updates, patches, etc. And you have the risk that if these engineers leave, it could be hard to find replacements. Spinning up and managing an open source Cassandra cluster, for example, is fairly complicated, and we say that with a good amount of cloud engineering experience.

So, this is why a lot of enterprises choose a managed database. You simply connect to an API in the cloud and then MongoDB, Google Cloud Platform, etc. will manage the database for you. By purchasing software, you not only get access to the software but also to the entire support network of the software provider. So, if your system suddenly crashes, you can actually call people who know how to fix it. If you don’t have this, you could get wiped out. We saw this a few years ago when CrowdStrike crashed and loads of businesses went down. Imagine an internal core system that suddenly crashes while the key engineer who understands it is on holiday. It could take your business down completely.

So, is MongoDB going to get disrupted here? We’re really not seeing this, as free databases such as Cassandra, Postgres, and the open-source version of MongoDB itself have been available for well over a decade. Enterprises go for the leading software products because they know these have been battle tested, i.e. they can scale to large volumes, they are secure, and there is a wide support network available to fix their systems.

At the same time, trying to replace one of these systems is actually a big risk for enterprises as well. The last decades have seen plenty of quarterly calls with sudden earnings warnings due to struggles in a new software rollout. And there have been instances where companies simply gave up on the rollout of their ERP, supply chain management, or database system. Remember, these are often 2-4 year projects. So, the assumption the market is making here, that enterprises are going to try to move away from their ERP, supply chain, database, data analytics, finance, CAD, etc. tools, is rather naive. Ripping out one of these is a 2-4 year project with no guarantee of success, so mathematically, there’s not going to be much disruption for the foreseeable future.

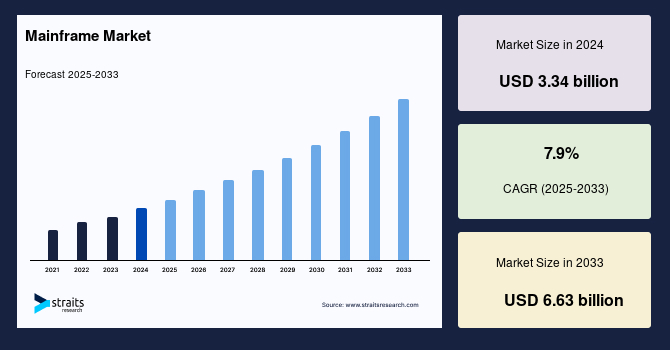

The history of software shows that it is extremely resilient. Take mainframes, for example, a 1960s technology that was disrupted in terms of new demand a long time ago. It’s actually still alive and growing:

Similarly, Excel and Windows later on had to compete with much more modern systems and even free open source tools. Both remain in the core stack of many enterprises today. Despite the rise of much better and more modern programming languages in the last few decades, tons of code today is still being written in 1970s C. Python, developed in the early ‘90s, remains the dominant programming language today, even in AI coding. Python has a lot of flaws: it’s not even a statically typed language and it’s slow compared with compiled languages. However, once a software tool really becomes dominant in a certain niche, history suggests it’s extremely hard to replace.

The ‘Lindy effect’ suggests that the future life expectancy of non-perishable items, like ideas, technologies, or books, is proportional to their current age, as their resilience and robustness increase with time. So C, Windows, and mainframes are actually much more likely to still be in use 20 years from now than a hot new app such as Claude Code. It’s even possible that two years from now no one will be using Claude Code, as Gemini Antigravity or Grok might become absolutely dominant.
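To make the proportionality concrete, here is a minimal sketch of the Lindy heuristic. It assumes a proportionality constant of 1 (expected remaining life equals current age), and the ages used are rough illustrative assumptions rather than measured data; the function name is hypothetical.

```python
def lindy_remaining_years(current_age_years: float, k: float = 1.0) -> float:
    """Expected remaining life under a simple Lindy heuristic.

    Assumption: remaining life = k * current age, with k = 1.0 chosen
    purely for illustration.
    """
    if current_age_years < 0:
        raise ValueError("age must be non-negative")
    return k * current_age_years


# Rough, assumed ages in years (illustrative only).
ages = {"mainframes": 60, "C": 50, "Claude Code": 1}

expected = {name: lindy_remaining_years(age) for name, age in ages.items()}

# List the technologies from longest to shortest expected remaining life.
for name, years in sorted(expected.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: ~{years:.0f} more years under this heuristic")
```

Under these assumptions, the older technologies clear a 20-year horizon comfortably while the newest tool does not, which is the point the heuristic makes. It is a rule of thumb, not a forecast.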

At the same time, it’s also not the case that enterprises have an abundance of deep engineering talent that knows how to build AI workflows in-house. As most companies want to unlock the productivity gains from AI, the only way to do this, in our view, is to integrate AI into the core tools that run the backbone of the business. And this means revenue acceleration for those software names, as those AI workflows can be monetized. Accounting software provider Xero illustrated this on their call:

“First, we’re using AI agents to automate actions and workflows across accounting, payments and payroll, so we can help our customers and give them time back. Think about automating bank reconciliations, or automatically closing books. And we’re also building agents that provide actionable insights, which helps our customers run their business better. Imagine being able to run detailed scenarios for your cash flow and test out different assumptions without ever having to use Excel. Additionally, SaaS apps no longer need to be unintelligent. They can intelligently surface to you what you need to pay the most attention to. Finally, accounting and payments are not areas that you can have errors in. People need to be able to trust their financial management systems to reconcile transactions, close books and calculate your tax. This is crucial information which LLMs do not have, and it gives us a strong advantage.

Through FY ‘26, AI has become ubiquitous across our platform because of the value it can deliver for our customers. We are prioritizing using AI to automate the key jobs that 90% of our users use and that are the most time consuming, like automated bank reconciliation, AI invoicing and document ingestion. This means that our AI creates maximum value for them. We designed our AI for a future where agents complete tasks end-to-end in the background and SaaS applications become the space where humans oversee their agents and get the insights that matter. Previously, our homepage was a to-do list of tasks for humans. Now it’s a control room for AI agents and insights, showing users what AI has already done.”

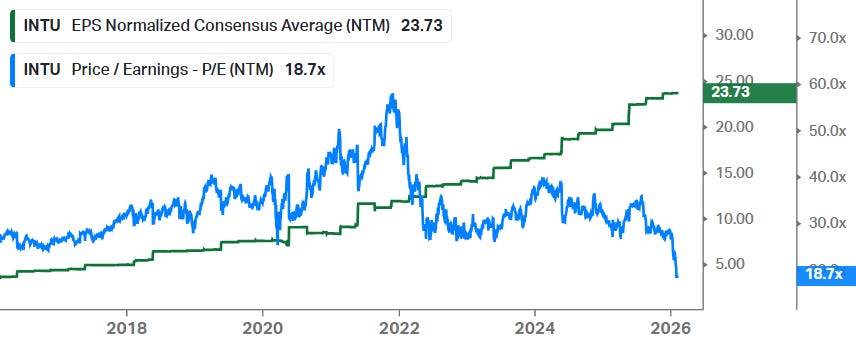

We think that the number of SMBs that are going to turn off their core accounting software is going to be rather limited. Are you really going to invest time in a difficult accounting software transition, or are you simply going to deploy AI in your existing accounting software to automate your workflows? We suspect that 90+% will do the latter, which means substantial upside for the accounting software names. So, we think that the best-in-class accounting software names are heavily oversold here. Intuit’s EPS is still trending up and will very likely continue to do so; we think growth will actually accelerate. At some point in the coming years, the market will start figuring out that this is actually a great business, and then we’ll see a rerating:

Vitec made similar comments on their call—yes, customers want the benefits of AI, but they also want their software not to change too much:

“They really want to hear that we’re working on it and we have it in the pipeline, but a lot of them are super conservative and say, yes, we want all of that, but please don’t change anything. They are really reluctant to do any big bang changes because, and this is one of the moats around vertical market software, is that it is so embedded into the customers’ processes. Yes, they want to benefit, but they don’t want to change anything at the same time. So, you will have to do this very gradually. The important thing is that they feel that they can benefit from these improvements over time.”

In our personal workflows, at this stage we can’t think of any tool that we’re going to shut down. We still need to tweak spreadsheets in Excel. Typing in precise prompts is also time consuming, and it’s often much faster to tweak the spreadsheet yourself. So, we use AI when we need to write 100-800 lines of code, as then it makes sense to invest the time to write a precise prompt. However, you don’t want to have to write a prompt for every tweak you need to make in your spreadsheet, as doing it manually is faster and much more fun.

While AI is superb at generating images, we’re even keeping our Canva subscription. Writing prompts and waiting for a good image to be generated is slow. It’s much faster to quickly grab a great image already available in Canva and do some quick editing inside Canva if needed. A business like Canva is one of the most at risk from AI; however, even here you can see a case for why it will likely still retain a large number of users. Similarly, for Adobe, a cool and free video editing tool from ByteDance called CapCut has been available for quite some time now; however, all the gifted video editors we know swear by Adobe Premiere Pro. We’re not saying that Adobe isn’t at risk, but we do think they will retain a large number of users.

Overall, we’re making more use of our tools than ever before as now our AIs are using them as well. For example, for our financial data terminal, we’re sending ever more requests to the API as AI is now analyzing loads of data to come up with investment ideas. So, Grok actually initially told us to take a look at this deep tech disruptor, and we subsequently reviewed it and then invested in it:

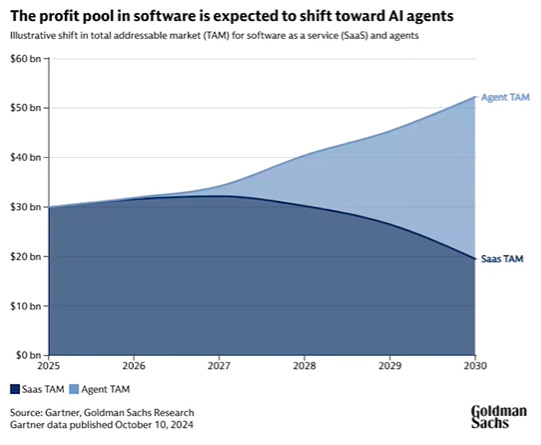

Gartner draws a similar conclusion, pointing to a likely shift towards usage-based agent monetization rather than pure SaaS subscriptions:

“Look, Claude Code can write code, software is going to zero” is a pretty extreme conclusion in our view. It doesn’t address the fact that free open-source software has been available for decades, which illustrates that price is not the only reason enterprises choose a given piece of software. Reliability, security and network effects around software are three crucial factors. At the same time, ripping out a single core software system is often a 2-4 year project involving considerable business risk.

Enterprises spend single-digit percentages of revenue on software versus 5x that amount on personnel. It makes much more sense to boost the productivity of your workforce than to attempt a set of highly risky software transitions with the aim of saving a few points of margin. Currently, an enterprise is better off investing its time in inserting AI into the business and automating as many workflows as possible. This is where you can really get the cost savings or, alternatively, grow your top line on the same cost base. This is clearly also the strategy that Jensen is going for.
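The arithmetic behind this can be sketched in a few lines. The figures below are hypothetical: a 5% software share of revenue stands in for "single-digit", personnel is taken as 5x that per the ratio above, and a 20% improvement on either cost line is an assumption chosen only to make the comparison like-for-like.

```python
def margin_points_freed(spend_share: float, improvement: float) -> float:
    """Margin points (percent of revenue) freed by improving one cost line.

    spend_share: cost line as a fraction of revenue (e.g. 0.05 for 5%).
    improvement: fractional saving or productivity gain on that line.
    """
    return spend_share * improvement * 100


# Hypothetical inputs, for illustration only.
software_share = 0.05                  # assumed single-digit software spend
personnel_share = 5 * software_share   # 5x software spend, per the text
improvement = 0.20                     # assumed 20% gain on either line

software_gain = margin_points_freed(software_share, improvement)
personnel_gain = margin_points_freed(personnel_share, improvement)

print(f"Cutting software spend by 20%: {software_gain:.1f} margin points")
print(f"Lifting workforce productivity by 20%: {personnel_gain:.1f} margin points")
```

Whatever improvement rate is assumed, the same percentage gain applied to personnel frees five times as many margin points as it does on software, simply because the cost base is five times larger. The software route also carries the transition risk discussed earlier.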

We suspect that current market action is being driven by a lot of trading flow aimed at ‘cutting losses’ and also by generalists, or quant funds, who have limited knowledge compared to the software specialists. JP Morgan draws a similar conclusion:

“In our view, generalist money flows responding to the rapid AI product rate-of-change dynamic are overwhelming the deeper-thinking fundamental software sector specialists who are slightly more grounded in the principles that create stickiness for Enterprise software businesses. These generalist money flows are invoking more knee-jerk selling, which is being exacerbated by index arbitrage basket selling, programmatic de-grossing, cross-correlation factor contagion, and a passive flow liquidity vacuum.

Two to three years ago, our investor call series with Boston Consulting Group (BCG) highlighted the evolution of the software stack, with the emergence of a data layer (Databricks, Snowflake, etc.) creating a foundation for AI Agents to handle some portion of what had previously been handled by core systems of record. It feels like an illogical leap to extrapolate Claude Cowork Plugins, or any similar personal productivity tools, to an expectation that every company will hereby write and maintain a bespoke product to replace every layer of mission-critical enterprise software they have ever deployed.

The current state of investor psychology has started to feel like the inverse of the 2021 software euphoria period, in which the same institutions that are currently selling software stocks at 25-30 year valuation lows were buying Software as fast as they could when the group was at a 20-year valuation high, fully convinced that Cloud was the world’s greatest business model and interest rates would remain at zero forever (and kindly recall our differentiated negative stance during this period). 2020-2021 may have been an equally surprising period of time in terms of mass investor psychology shifting radically into a very unrealistic mindset.”

However, we do see one bear case scenario. The most credible bear case, in our view, is if AI is able to take humans out of the loop entirely. We would define such a scenario as ‘AGI’. This would be a scenario where Jensen can fire all his engineers and a bunch of AIs are running Nvidia and reporting to Jensen or to an uber AI. Part of the reasoning above is based on the view that you need humans and AI agents working alongside each other, and so both need a common set of tools. If at some stage the AI becomes so smart that it can take humans out of the loop completely, we think that the AI will indeed simply write its own tooling.

Personally, we’re skeptical that this level of intelligence can be achieved with the current transformer architecture, although we’re not ruling out that, with further advancements in AI, this scenario could be possible 10 years or so from now. Therefore, we continue to view portfolio diversification as important. This is a key reason why we cover a wide variety of tech topics in this newsletter, ranging from semis, software, biotech tools, space companies, industrials, robotics, online apps, fintech, adtech, etc. That being said, if AGI arrives and AI is running the entire economy, or large parts of it, there is no longer any reason for a capitalistic economy anyway. A socialist system will be the easiest way for everyone to share fairly in the abundance that AI creates. So, potentially, portfolio diversification won’t matter in that scenario anyway, as everything will get nationalized.

Coming back to the current reality, we see the risk-reward as still being attractive in software, so we’re comfortable running a 15-20% portfolio weighting in this space. We think that revenue and EPS growth will accelerate from here and that the market is massively underestimating just how resilient various types of software have been over the last 60 years. Software is more like geology, where one layer is built on top of the next. And we think that the next logical step is to build AI on top of proven and reliable systems that humans also still know how to operate.

Next, we will dive deeper into AI and semis in particular, where Credo got hammered due to the copper vs optics debate. We’ll give our top semi picks in the AI data center space, our views on ASML after a strong and deserved rally, and what we’re doing with our shares now. We’ll also review our buy-rated list and our top picks, as we’ve been going through most earnings calls so far and we’re making a few trades here, including taking profits in some winners.