The Cloud Explosion & Stock Picks in the AI Value Chain

AMZN + more AI winners and losers

Amazon’s Acceleration

A name that had been lagging in the AI value chain is major cloud provider Amazon. One key reason is that AWS has been heavily focused on building its own ASICs (Graviton as its custom CPU and Trainium as its accelerator), which resulted in AWS receiving a smaller allocation of Nvidia GPUs. Over the past few years, Nvidia made sure that partners fully committed to its ecosystem, such as the neoclouds, had an ample supply of GPUs. This resulted in Microsoft interconnecting its Azure data centers with those of CoreWeave and offloading GPU traffic to them behind the scenes.

Another reason Amazon has been lagging is that key competitor Microsoft was able to offer OpenAI’s leading models in its cloud. However, this is now changing with the recent deal between OpenAI and AWS, under which OpenAI’s less advanced models can be deployed on AWS. These could still be massive markets, as many workloads don’t need the most advanced models anyway. So, headwinds for AWS are now easing, as Deutsche Bank highlights:

“The agreement is for $38bn and ‘OpenAI will immediately start utilizing AWS compute as part of this partnership, with all capacity targeted to be deployed before the end of 2026, and the ability to expand further into 2027 and beyond.’ Thus, we assume that $38bn is likely to be deployed mostly in 25/26, with an expectation that this partnership can grow meaningfully from there, and thus Street estimates of only $26bn of incremental AWS revenue in 2026 are likely biased higher. Additionally, $38bn represents ~20 points of growth on top of AWS’s backlog of ~$200bn as of the 3Q. Furthermore, CEO Andy Jassy’s comments on this quarter’s call about ‘not [being] constrained in any way in buying NVIDIA’ suggest that the AWS/NVIDIA partnership is on much more stable footing than many thought just five days ago.

All in, while a deal like this was thought to be in the works following the newly struck deal between OpenAI and Microsoft that dropped Azure’s right of first refusal, it should not only support continued AWS revenue acceleration but also strike at the bear thesis that AWS is fundamentally disadvantaged relative to peers in its infrastructure decisions, with its Trainium focus limiting NVIDIA supply and its networking decisions leaving it unable to handle next-generation AI workloads.

In sum, while the AI ‘loser’ overhang continues to get lifted for AMZN, we expect there is still more to come for this narrative to roll off as it relates to incremental customer announcements / runway for AWS revenue growth acceleration given that today’s announcement barely scratches the surface on the potential for 15GW of capacity getting added to the AWS network in the next two years.”
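To make Deutsche Bank’s backlog arithmetic concrete, here is a minimal Python sketch. The only inputs are the two approximate figures from the quote (the $38bn deal and the ~$200bn backlog); the function name is ours and purely illustrative, and contracted backlog is not the same thing as annual revenue.

```python
# Approximate figures quoted by Deutsche Bank above (in $bn).
OPENAI_DEAL_BN = 38.0   # headline value of the OpenAI/AWS agreement
AWS_BACKLOG_BN = 200.0  # approximate AWS backlog as of 3Q


def backlog_uplift_pct(deal_bn: float, backlog_bn: float) -> float:
    """Return a new deal's size as a percentage of the existing backlog."""
    return 100.0 * deal_bn / backlog_bn


uplift = backlog_uplift_pct(OPENAI_DEAL_BN, AWS_BACKLOG_BN)
print(f"Backlog uplift: {uplift:.0f} percentage points")  # prints 19, i.e. the "~20 points"
```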

Thus, Deutsche Bank is talking about 15GW of capacity being added to AWS over the next two years, and according to Barclays (below), every GW of GPU capacity is worth about $20bn of annual revenue, or $10bn in the case of custom silicon (ASICs):

“If the basic math for AI points to every GW of GPUs being $20B of annual revenue and every GW of custom silicon being half that run rate, we think a significant amount of revenue may show up in ’26 and ’27 for AWS. Datacenterhawk shows 28+GWs of PPAs that AWS has options to build out, which could amount to hundreds of billions of annualized AI and cloud revenue down the road at full utilization.

The street has been overly hung up on short term AWS growth rates (admittedly a fair concern as Trainium/Rainier had yet to show its potential) but we think the past 48 hours have gone a long way to dispelling concerns. We’d also note that Anthropic’s position as the leading API provider by revenue in AI may allow AWS to see the halo effect into other enterprise customers beyond AI natives like Cursor.”
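The Barclays rule of thumb can be combined with Deutsche Bank’s 15GW figure in a few lines of Python. This is a back-of-the-envelope sketch, not a forecast: the per-GW run rates are the ones quoted above and assume full utilization, while the GPU/custom-silicon split (`gpu_share`) is a hypothetical input, since neither bank specifies the mix.

```python
# Rules of thumb quoted from Barclays: annual revenue per GW at full utilization ($bn).
GPU_REV_PER_GW_BN = 20.0
ASIC_REV_PER_GW_BN = GPU_REV_PER_GW_BN / 2  # custom silicon: half the GPU run rate


def annual_revenue_bn(capacity_gw: float, gpu_share: float) -> float:
    """Annual revenue ($bn) for capacity_gw split between GPUs and custom silicon.

    gpu_share is a hypothetical input: 0.0 = all custom silicon, 1.0 = all GPUs.
    """
    if not 0.0 <= gpu_share <= 1.0:
        raise ValueError("gpu_share must be between 0 and 1")
    per_gw = gpu_share * GPU_REV_PER_GW_BN + (1 - gpu_share) * ASIC_REV_PER_GW_BN
    return capacity_gw * per_gw


CAPACITY_GW = 15.0  # Deutsche Bank's figure for capacity added over ~two years
for share in (0.0, 0.5, 1.0):
    print(f"GPU share {share:.0%}: ${annual_revenue_bn(CAPACITY_GW, share):.0f}bn/yr")
```

Under these assumptions, 15GW lands somewhere between $150bn and $300bn of annual revenue, and it clears $200bn once roughly a third of the capacity is GPUs (15 × (10 + 10 × s) > 200 gives s > 1/3).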

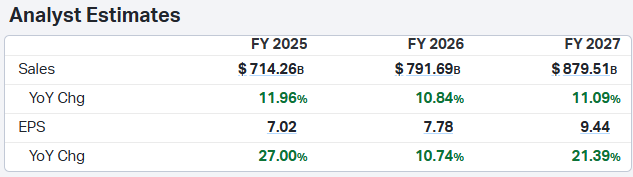

So it seems plausible that AWS could add over $200 billion in annual AI revenue in the coming years, compared with the $127 billion in revenue AWS will generate this year. Thus, we’re potentially looking at a large revenue acceleration in Amazon’s key business, which contributes 56% of its profits. Yet consensus is only modeling $170 billion of revenue growth for the entire Amazon business over the next two years:

Meanwhile, this leading cloud provider is trading at 33x forward EPS:

Overall, we like the setup in AMZN a lot. The company is one of the two dominant clouds for enterprises, there is clearly plenty of upside to consensus estimates, and the valuation is fairly attractive for this high-growth story. William Blair also notes that Amazon’s next new business, the Kuiper satellite network, could start contributing to earnings as early as next year:

“Amazon is trading at 13.4 times our out-year adjusted EBITDA estimate. We believe this is a compelling value given the competitive advantages of the Amazon platform across AWS and its other businesses. With the AWS business reaccelerating and many of the bear arguments being knocked down, we see an attractive setup from here as the company continues to execute. Outside of AWS, we also see potential for businesses like advertising, Kuiper, and others to be additive to profitability into 2026 and beyond, which would be upside to our estimates.”

We agree with these comments and see AMZN as one of the lowest-risk ways to play the growth in AI. Even if AI momentum were to stall at some point, the cloud would continue to grow strongly anyway, as AI is simply accelerating the cloud’s growth. This limits downside risk if there is a GPU supply overhang at some stage. Additionally, AMZN has further growth potential from higher-margin businesses such as advertising and satellites. Overall, we think it is highly likely that long-term investors will earn attractive returns in this name, and we’re happy to run a large position here.

Some analysts have been talking about how the large clouds will get disrupted by neoclouds. However, these are comments from people who understand nothing about cloud engineering. The simple reality is that enterprises can run all their workloads in the major clouds, as illustrated here by Azure, and this is only a glimpse of what’s available in the major clouds:

Neoclouds, by contrast, are simply portals to rent GPU capacity. As a result, most of the neoclouds’ revenue actually comes from deals with the major clouds, which offload their excess GPU demand to them. Customers don’t even know that they’re using CoreWeave, for example, as they connect to Azure via API and Azure then routes these workloads to CoreWeave behind the scenes. As enterprises’ workloads and data reside in the major clouds, these are also the obvious places to run AI workloads from a cloud engineering perspective. An important factor here is that egress fees are high, which means that transporting data out of the major clouds is both costly and time-consuming.
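To illustrate why moving data out of a major cloud is both costly and slow, the sketch below prices a hypothetical 1 PB transfer. The flat $0.05/GB egress rate and the 10 Gbps sustained link are illustrative assumptions only, not any provider’s actual price list; real egress pricing is typically tiered and varies by provider and region.

```python
def egress_cost_usd(data_gb: float, rate_per_gb: float) -> float:
    """Cost of moving data_gb out of a cloud at a flat (hypothetical) per-GB rate."""
    return data_gb * rate_per_gb


def transfer_days(data_gb: float, link_gbps: float) -> float:
    """Days needed to move data_gb over a link_gbps link running at full line rate."""
    bits = data_gb * 1e9 * 8             # decimal GB -> bits
    seconds = bits / (link_gbps * 1e9)   # Gbps -> bits per second
    return seconds / 86_400              # seconds -> days


DATA_GB = 1_000_000  # 1 PB in decimal units, hypothetical dataset size
RATE_PER_GB = 0.05   # hypothetical flat egress rate in USD/GB
LINK_GBPS = 10       # hypothetical sustained link speed

print(f"Cost: ${egress_cost_usd(DATA_GB, RATE_PER_GB):,.0f}")
print(f"Time: {transfer_days(DATA_GB, LINK_GBPS):.1f} days")
```

With these inputs, the move costs about $50,000 and ties up the link for more than nine days at full line rate.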

Thus, we see neoclouds as data centers to which excess demand flows, simply because the major public clouds can’t fulfill demand themselves. This is evident in the series of deals announced between major cloud providers and neoclouds over the last few months. It’s quite possible that neoclouds can continue to be good investments if they’re able to attract sustainable inference or training workloads; however, these are much more speculative companies to invest in. Competition is fierce, while neoclouds offer little differentiation. On the other hand, when it comes to the major public clouds in which you can run your enterprise workloads, there are only three options: Amazon AWS, Microsoft Azure and Google Cloud Platform.

Thus, while neoclouds such as CoreWeave can outperform in a strong AI cycle, their risk profile is very different from that of the major clouds, and personally, we wouldn’t run large positions in them. If there is a GPU capacity overhang at some stage, then once the neoclouds’ contracts run out, these are businesses that could well get wiped out. We see neoclouds as excellent plays in a scenario where GPU capacity remains constrained relative to demand. In such a scenario, GPU pricing will remain attractive and the neoclouds will be able to pick up demand at attractive margins. Once the major clouds have sufficient GPU capacity, there is no longer any reason to send this excess traffic to the neoclouds; instead, they can pocket the extra margin on accelerated workloads themselves.

For premium subscribers, we will dive further into developments in AI, the names we’re adding to, and the names in which we’re taking profits.