The Bull Case for Amazon & the Cloud, Data Center Semis, and AI Winners

This week's findings

The Bull Case for Amazon & the Cloud

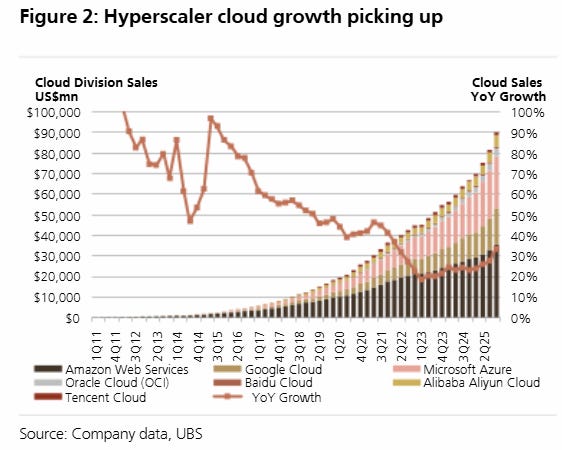

The three major clouds—Amazon AWS, Microsoft Azure, and Google Cloud Platform (GCP)—have been some of the most straightforward investment cases over the last decade. The breadth of web services and apps available on their platforms, their vast network of data centers around the world, and enterprise IT workloads being effectively locked in on these platforms due to high switching costs have resulted in some of the most formidable moats we’ve ever come across. As enterprises shifted workloads from capex-intensive on-premise data centers to on-demand consumption in the public cloud, it was easy for the big three clouds to capture that enormous growth:

With the explosion in token consumption, as AI finds ever more applications in the real economy, cloud growth rates are accelerating again. The major clouds are the logical place for enterprises to deploy their AI workloads, as this is where they run their apps and store their data. Moving data around is costly (data egress fees) and time-intensive. Because the major clouds have a plethora of data centers available in each region, enterprises can build systems with ample backup compute and data. Basically, if you design your workflows well, you can get very close to 100% uptime on the major clouds: put a global load balancer in front of VMs in multiple regions and back them with a multi-regional database. All of this is easy to set up on each of the major clouds.
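To put rough numbers on the redundancy argument: if each region is up some fraction of the time and regions fail independently (a simplifying assumption, since real outages can be correlated), the chance that at least one region is serving traffic rises quickly with every region added. The sketch below uses a hypothetical 99.9% per-region availability, not a quoted SLA from any provider, and the function names are our own.

```python
HOURS_PER_YEAR = 24 * 365


def combined_availability(per_region: float, regions: int) -> float:
    """Probability that at least one of `regions` independent regions is up."""
    if not 0.0 <= per_region <= 1.0:
        raise ValueError("per_region must be between 0 and 1")
    if regions < 1:
        raise ValueError("regions must be at least 1")
    # The system is down only if every region is down at the same time.
    return 1.0 - (1.0 - per_region) ** regions


def expected_downtime_hours(availability: float) -> float:
    """Expected hours of downtime per year at a given availability."""
    return (1.0 - availability) * HOURS_PER_YEAR


if __name__ == "__main__":
    PER_REGION = 0.999  # hypothetical figure, for illustration only
    for n in (1, 2, 3):
        a = combined_availability(PER_REGION, n)
        print(f"{n} region(s): availability={a:.9f}, "
              f"downtime={expected_downtime_hours(a):.5f} h/yr")
```

Under these assumptions, a single region implies about 8.76 hours of downtime a year, while two regions bring that down to roughly half a minute. Uptime approaches 100% but never mathematically reaches it, and the independence assumption is the weak point of the estimate.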

This is Satya explaining how AI consumption drives increased demand for all of Azure’s services:

“The number of customers spending $1 million plus per quarter on Foundry grew nearly 80%, driven by strong growth in every industry, and over 250 customers are on track to process over 1 trillion tokens on Foundry this year. And of course, Foundry remains a powerful on-ramp for the entire cloud. The vast majority of Foundry customers use additional Azure solutions like developer services, app services, and databases as they scale.”

Satya also illustrated how easy it is for the big clouds to launch massive new apps and cross-sell them to their customer base. Basically, Microsoft built a Databricks clone that is already at an annual revenue run rate of over $2 billion. This business scaled much more quickly than Databricks, Snowflake, or Palantir did in their initial years; it’s not even close:

“Two years since it became broadly available, Fabric’s annual revenue run rate is now over $2 billion with over 31,000 customers, and it continues to be the fastest-growing analytics platform on the market with revenue up 60% year-over-year.”

Neoclouds, on the other hand, are mainly focused on pure GPU workloads with far weaker customer lock-in. They typically have only a few data centers and are heavily leveraged, having taken on loads of debt to build them. So, we think that the quality of the business is much lower, combined with a highly risky financial profile due to either an already large debt load (e.g. CoreWeave) or a high debt load to come in the next few years as GPU capacity gets built out (e.g. Nebius). Neoclouds are also heavily reliant on megadeals with the big clouds, or other hyperscalers, which may or may not get renewed 4 years from now, further increasing the risk profile.

In the major clouds, by contrast, the financial profile is compelling. They can finance their growth from highly attractive operating cash flows. This makes these three assets (AWS, Azure and GCP) some of the best-quality plays on the long-term growth in AI consumption. We see Amazon as the best play on this growth story due to the high contribution of AWS to Amazon group profits (already close to 60%), and this ratio will continue to increase as AWS will be the main driver of profit growth going forward.

A potential risk we see for AWS is that the company is heavily focused on its custom, Trainium-based AI stack whereas Nvidia is obviously innovating extremely rapidly in this space. So, if we were running AWS, we’d probably rely more heavily on Nvidia to drive AI revenues from AWS’ wide enterprise customer base. However, from the recent call, it seems that the Trainium-based model is working well and that AWS has all the necessary intermediary software layers ready. This means that LLMs can easily be deployed on their custom hardware and then those can be made available to customers over API.

Being cloud customers ourselves (heavily GCP focused), we don’t really care which underlying silicon is being used. For inference, as long as the model can do the job, the price per million tokens is attractive enough, and the tokens arrive quickly enough, nothing else really matters to us. We suspect that most enterprises will similarly look at these two criteria.
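As a small illustration of that two-criteria rule, the sketch below filters inference options by a minimum throughput and then picks the cheapest one per million tokens. The option names, prices and speeds are entirely hypothetical placeholders, not quotes from any cloud, and the class and function names are our own.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class InferenceOption:
    name: str
    usd_per_million_tokens: float
    tokens_per_second: float


def cheapest_fast_enough(
    options: Iterable[InferenceOption], min_tokens_per_second: float
) -> Optional[InferenceOption]:
    """Return the cheapest option that meets the speed floor, or None."""
    eligible = [o for o in options if o.tokens_per_second >= min_tokens_per_second]
    return min(eligible, key=lambda o: o.usd_per_million_tokens, default=None)


# Hypothetical options: the underlying silicon never enters the decision.
OPTIONS = [
    InferenceOption("option-a", usd_per_million_tokens=3.0, tokens_per_second=80.0),
    InferenceOption("option-b", usd_per_million_tokens=2.0, tokens_per_second=40.0),
    InferenceOption("option-c", usd_per_million_tokens=2.5, tokens_per_second=120.0),
]

if __name__ == "__main__":
    choice = cheapest_fast_enough(OPTIONS, min_tokens_per_second=60.0)
    print(choice.name if choice else "no option is fast enough")
```

With a speed floor of 60 tokens per second, the cheapest option overall (option-b) is excluded for being too slow, so the rule selects option-c.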

A weakness is that Amazon doesn’t have the most advanced ChatGPT or Gemini models available, and the exclusive availability of the best models of those families on Azure and GCP respectively will be an attractive feature for those clouds. However, Anthropic’s Claude currently leads in coding and is a very capable model overall, so Claude will likely be a key AI demand driver on AWS. AWS also offers open-source models such as DeepSeek, Qwen, Llama, etc. Overall, we do think that GCP is best positioned here, as the exclusivity of Gemini is highly attractive and Claude is available as well, but GCP is only a small part of Google’s conglomerate (11% of EBIT last year). If we could access GCP as a pure play, we would probably have it as our top pick.

That said, we do think that AWS has a sufficient breadth of capable models available and, due to its massive share of the public cloud market, Amazon is well positioned to capture large growth from AI demand in the coming 3-5 years. On the recent call, Andy Jassy made a pretty compelling case for why AWS is executing well:

“AWS growth continued to accelerate to 24%, the fastest we’ve seen in 13 quarters. AWS is now a $142 billion annualized run rate business and our chips business, inclusive of Graviton and Trainium, is now over $10 billion in annual revenue run rate and growing triple-digit percentages year-over-year. Our backlog is $244 billion, that’s up 40% year-over-year and 22% quarter-over-quarter. And we have a lot of deals that are in the pipeline.

We’re continuing to see strong growth in core non-AI workloads as enterprises return to focusing on moving infrastructure from on-premises to the cloud. We’re adding significant EC2 core computing capacity each day, and the majority of that new compute is using our custom CPU, Graviton. Graviton is up to 40% more price performant than leading x86 processors and is used extensively by over 90% of AWS’ top 1,000 customers.

We consistently see customers wanting to run their AI workloads where the rest of their applications and data are. We’re also seeing that as customers run large AI workloads on AWS, they’re adding to their core AWS footprint as well. But the biggest reason that AWS continues to gain AI share is our uniquely broad top to bottom AI stack. In AI, we’re doing what we’ve always done in AWS, solving customer challenges. Customers are realizing as they get further into AI that they need choice as different models are better on different dimensions. Amazon Bedrock makes it easy to use these models to run inference securely, scalably, and performantly. Bedrock is now a multibillion-dollar annualized run rate business and customer spend grew 60% quarter-over-quarter. The second challenge is how to tune the model for your application—it takes a lot of work to post train and fine-tune a model for your application. Our SageMaker AI service, along with fine-tuning tools in Bedrock, make this much easier for customers.

Another challenge is cost. If we want AI to be used as expansively as companies want, we have to make the cost of inference lower. A significant impediment today is the cost of AI chips. Customers are starving for better price performance. It’s why we’ve built our own custom silicon Trainium, and it’s really taken off. We’ve landed over 1.4 million Trainium2 chips, our fastest ramping chip launch ever. Trainium2 is 30% to 40% more price performant than comparable GPUs and is a multibillion-dollar annualized revenue run rate business with 100,000-plus companies using it. Trainium is the majority of Bedrock usage today. We recently launched Trainium3, which is up to 40% more price performant than Trainium2, and we’re seeing very strong demand for Trainium3. We expect nearly all of our Trainium3 supply of chips to be committed by mid-2026. Although we’re still building Trainium4, we’re seeing very strong interest already.

Looking ahead, the primary way companies will get value from AI is with agents. Enterprises are apprehensive about deploying to production because these agents need to securely connect to compute, data, tools, and other elements. This is a new and hard problem where a solution has not existed until we launched Bedrock AgentCore. Agents can be fully autonomous, run persistently for hours or days, scale out quickly, and remember context. At this past AWS re:Invent, we launched Frontier Agents to do that, Kiro autonomous agents for coding tasks, AWS DevOps agents for detecting and resolving operational issues, and AWS security agents for proactively securing applications throughout the development life cycle.”

So, we think that with the arrival of AI agents our thesis above becomes even more compelling. Cloud growth rates will continue to accelerate as AI agents are XPU/GPU intensive, and as agents need access to data, apps and also CPUs, the major clouds are the obvious place to run these. This should also accelerate growth for names such as Snowflake and Databricks as these are hubs for data analysis where agents can be deployed.

Satya notes that agents can also leverage Azure security roles, making it safer for enterprises to operate them, as you can define what an agent can and can’t access in the cloud:

“We are also addressing agent building with Copilot Studio and Agent Builder. Over 80% of the Fortune 500 have active agents built using these low-code/no-code tools. As agents proliferate, every customer will need new ways to deploy, manage and protect them. We believe this creates a major new category and significant growth opportunity for us. This quarter, we introduced Agent 365, which makes it easy for organizations to extend their existing governance, identity, security and management to agents. That means the same controls they already use across Microsoft 365 and Azure, now extend to agents they build and deploy on our cloud or any other cloud. Partners like Adobe, Databricks, NVIDIA, SAP, ServiceNow, and Workday are already integrating Agent 365. We are the first provider to offer this type of agent control plane across clouds.”

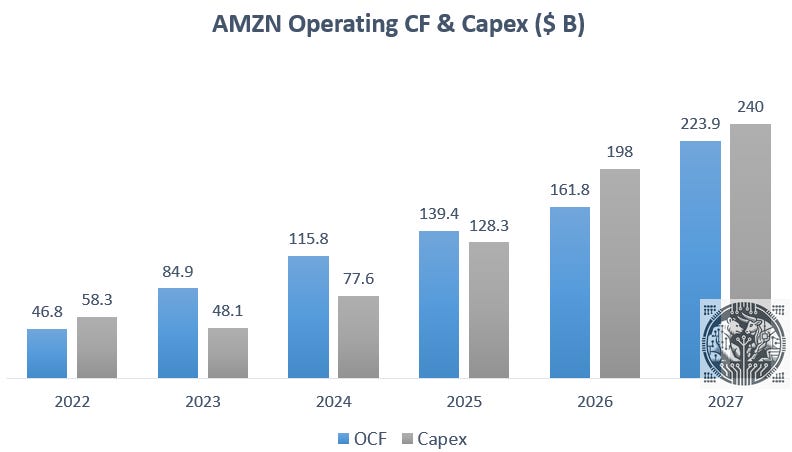

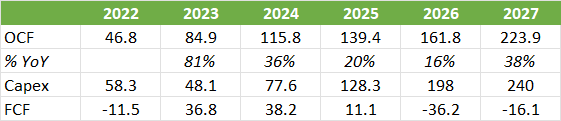

While the demand story for the big clouds looks compelling in the coming years, their share prices have underperformed over the last year. What drove the recent sell-off in Amazon’s shares was the capex outlook: with a heavy investment cycle, the company will go into negative FCF for the coming two years or so. However, the attraction is that most of this capex can be financed with operating cash flow, limiting the cash burn.

So, the market is worried about a heavy investment cycle at Amazon for the foreseeable future, but we see the risk as limited due to the limited cash burn. If growth comes in weaker than expected, capex can be dialed down again to unlock strong free cash flow. Conversely, if growth continues to come in stronger than expected, rising capex can be financed with the growth in operating cash flow. So, the negative FCF should already shrink again in ’27 on JP Morgan’s estimates:

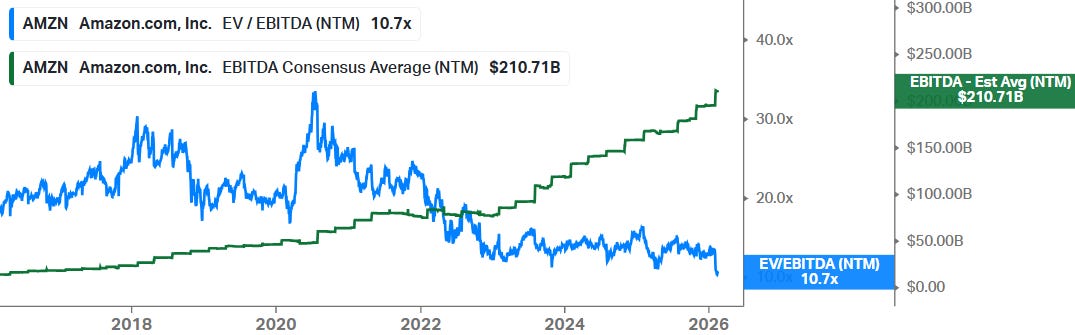
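The mechanics behind this argument are simply FCF = operating cash flow minus capex. The sketch below projects that identity forward using indexed, hypothetical figures (operating cash flow starting at 100, and an invented capex path); only the 27% growth rate echoes the operating cash flow CAGR cited in this piece. None of these are Amazon’s or JP Morgan’s actual numbers.

```python
from typing import List, Sequence, Tuple


def project_fcf(
    starting_ocf: float, ocf_growth: float, capex_path: Sequence[float]
) -> List[Tuple[float, float, float]]:
    """Return (ocf, capex, fcf) per year, with OCF compounding at `ocf_growth`."""
    rows = []
    ocf = starting_ocf
    for capex in capex_path:
        rows.append((ocf, capex, ocf - capex))  # FCF = OCF - capex
        ocf *= 1.0 + ocf_growth
    return rows


if __name__ == "__main__":
    # Hypothetical index values: capex steps up sharply, then flattens.
    heavy_cycle = project_fcf(100.0, 0.27, [110.0, 130.0, 130.0])
    # Hypothetical downside: slower growth, but capex is dialed back in response.
    dialed_down = project_fcf(100.0, 0.10, [110.0, 100.0, 90.0])

    for label, rows in (("heavy cycle", heavy_cycle), ("dialed down", dialed_down)):
        for year, (ocf, capex, fcf) in enumerate(rows):
            print(f"{label} year {year}: OCF={ocf:.1f} capex={capex:.1f} FCF={fcf:.1f}")
```

In both hypothetical paths FCF starts negative and turns positive within three years: in the first because operating cash flow outgrows a flattening capex line, in the second because capex is cut when growth disappoints.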

As Amazon now goes into a new investment cycle, we think it’s best to value the shares on a gross profitability measure, i.e. before capex and depreciation, such as operating cash flow or EBITDA. We see strong operating cash flow growth in the coming years (27% CAGR, table above) and similarly strong EBITDA growth (23.5% CAGR on consensus estimates over the coming three years). As Amazon is now trading on a trough EV/EBITDA valuation, the shares are positioned to start capturing that profitability growth, as little of it is priced in:

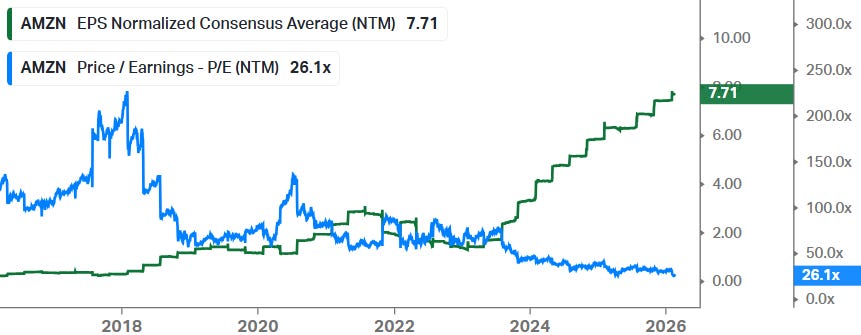
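To make the compounding concrete, the sketch below converts the two growth rates cited above into three-year multiples, and shows how an EV/EBITDA multiple would compress if enterprise value stayed flat while EBITDA compounded. The three-year horizon for operating cash flow and the starting multiple of 12x are assumptions for illustration, not figures from the charts.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """How many times larger a metric is after compounding at `cagr` for `years`."""
    return (1.0 + cagr) ** years


def forward_multiple(current_multiple: float, cagr: float, years: int) -> float:
    """Valuation multiple on the future metric if the numerator (e.g. EV) stays flat."""
    return current_multiple / growth_multiple(cagr, years)


if __name__ == "__main__":
    ocf_x = growth_multiple(0.27, 3)      # about 2.05x at a 27% CAGR
    ebitda_x = growth_multiple(0.235, 3)  # about 1.88x at a 23.5% CAGR
    print(f"Operating cash flow after 3 years: {ocf_x:.2f}x")
    print(f"EBITDA after 3 years: {ebitda_x:.2f}x")

    HYPOTHETICAL_EV_EBITDA = 12.0  # placeholder, not Amazon's actual multiple
    print(f"Multiple on year-3 EBITDA at a flat EV: "
          f"{forward_multiple(HYPOTHETICAL_EV_EBITDA, 0.235, 3):.1f}x")
```

Put differently, at a 23.5% CAGR EBITDA nearly doubles in three years, so a flat share price would imply the multiple roughly halving from an already low starting point.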

On a PE basis as well, Amazon is now at its cheapest valuation ever despite, in our view, a compelling growth profile:

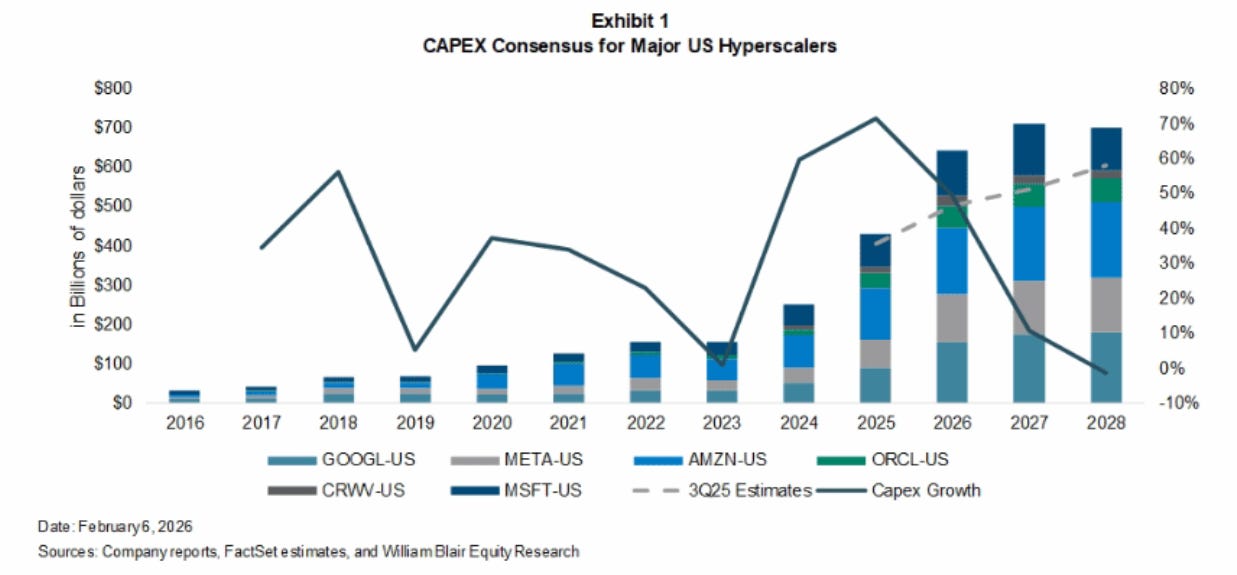

Whereas data center capex plays have been the biggest winners over the last few years, at some stage growth rates in annual data center capex will start to moderate. We see this as being the big catalyst which will drive outperformance for the major clouds, as the expansion in operating cash flow relative to more flattish capex will unlock steep growth in FCF.

So, AI capex plays need ever more capex every year to show growth, but this is not the case for the major clouds. Clouds can monetize their entire installed data center base via recurring revenues. As we discussed, pricing power is strong due to customer lock-in, while expanding the cloud platform with more apps and services creates a flywheel of growth. While the major clouds haven’t performed well over the last year, we continue to see them as some of the most attractive plays on the long-term growth in AI demand.

Deutsche had some entertaining comments following Amazon’s sell-off, and we agree with the big picture here:

“Amazon is not becoming more capital intensive, rather, we see the capex ramp as a pull forward of capital that would have been deployed in the cloud over many years to drive the digital transformation of the economy which is now happening much more quickly and in a narrower time frame. Cloud was always set to transform the % of GDP that flows through digital services and it’s why we believe the opportunity in the space measures in the trillions as the COGS of this transformation. Probabilistic thinking machines (AI) are simply accelerating that transformation and making it more tangible in the medium term. Amazon has spent the better part of the last 20 years watching AWS demand signals and converting that into capacity plans, there is no company with more data and experience to make this capacity growth decision in ‘26 and beyond. The risk of underinvesting is certainly higher than overinvesting as the latter would mean that the company would simply grow into the capacity over time, while wringing out efficiencies within the infrastructure. Akin to what we have seen the company achieve in the retail business since exiting the capacity boom initiated during the pandemic.”

A credible pushback on the thesis above is that a high proportion of cloud bookings is currently coming from AI labs such as OpenAI, which may or may not have the means to pay for these 3-4 years from now. However, we think this misses the big picture that AI is finding ever more applications to raise productivity. So even if OpenAI blows up, it’s highly likely that its booked capacity will simply get taken up by Anthropic, Grok, or overall enterprise AI demand. We agree with Deutsche above that, should there be an overbuild of AI capacity at some stage, capex can be lowered dramatically for a number of years so that demand can grow into the available capacity and drive high data center utilization rates.

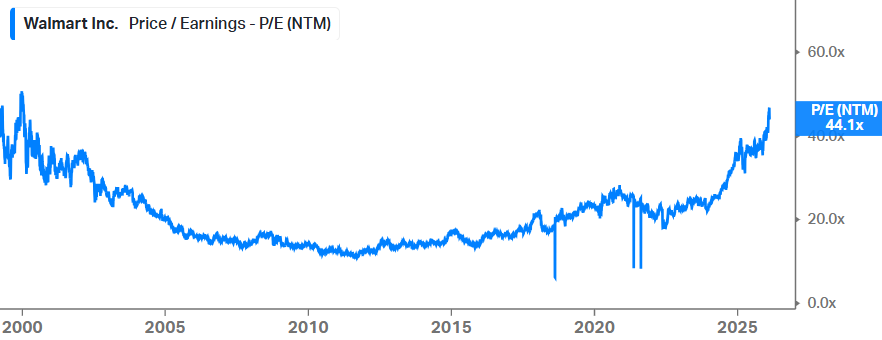

We think that the market is currently making a number of illogical moves. For example, some have pointed out that Amazon has been underperforming Walmart. However, Walmart’s outperformance has been driven by pure multiple re-rating, whereas Amazon’s EPS performance has been stellar but its multiples have gotten tremendously cheaper.

We think that the market has this completely wrong: Amazon should be trading on 45x and Walmart on 20x or so. Investors are now being asked to pay an astronomical valuation for a company like Walmart with mediocre growth. Basically, the market is scrambling for safety in physical assets. Usually, when shares get absurdly expensive like this, subsequent returns are lackluster at best. Note that Walmart also traded on a value multiple over the 2005-2015 period as the company ‘was going to get disrupted by e-commerce’, somewhat similar to the fears around many software names today.

Next, we will dive into semi stocks that should benefit from rising capex at the major clouds, and a number of other names where AI can drive meaningful upside in the coming years.