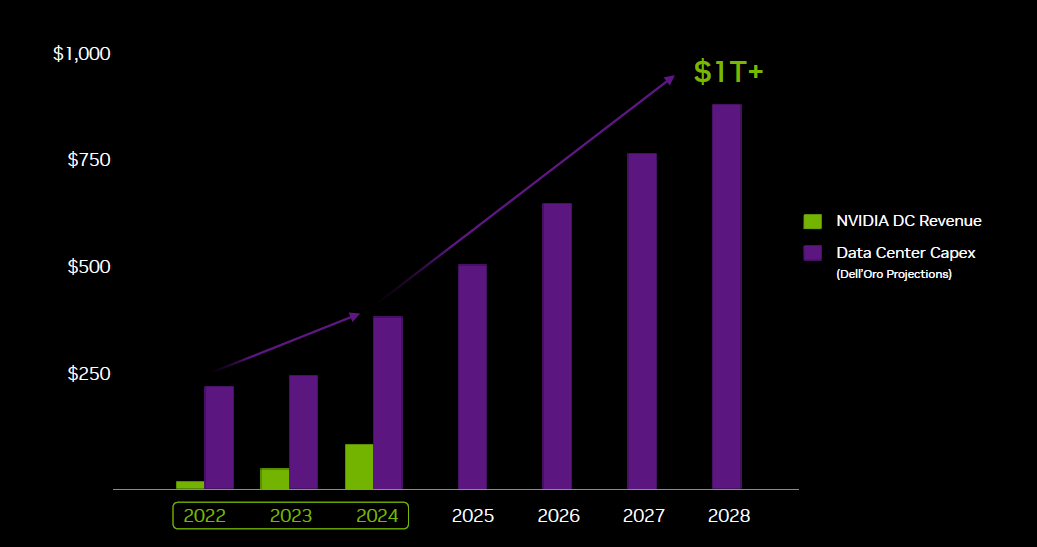

Nvidia’s current outlook remains strong with AI data center spend continuing to grow at a brisk pace:

This is Nvidia’s CFO on what’s driving increased spend in the AI data center:

“As we continue to deliver both Hopper and Blackwell GPUs, we are focusing on meeting the soaring global demand. This growth is fueled by capital expenditures from the cloud to enterprises, which are on track to invest $600 billion in data center infrastructure and compute this calendar year alone, nearly doubling in 2 years. We expect annual AI infrastructure investments to continue growing, driven by several factors: reasoning agentic AI requiring orders of magnitude more training and inference compute, global build-outs for sovereign AI, enterprise AI adoption, and the arrival of physical AI and robotics.

Blackwell has set the benchmark as it is the new standard for AI inference performance. The market for AI inference is expanding rapidly with reasoning and agentic AI gaining traction across industries. Blackwell's rack scale NVLink and CUDA full stack architecture addresses this by redefining the economics of inference. New NVFP4 4-bit precision and NVLink 72 on the GB300 platform delivers a 50x increase in energy efficiency per token compared to Hopper, enabling companies to monetize their compute at unprecedented scale. For instance, a $3 million investment in GB200 infrastructure can generate $30 million in token revenue, a 10x return.

Adoption of NVIDIA's robotics full stack platform is growing at a rapid rate with over 2 million developers, and 1,000-plus hardware and software applications taking our platform to market. Leading enterprises across industries have adopted Thor, including Agility Robotics, Amazon Robotics, Boston Dynamics, Caterpillar, Figure, Hexagon, Medtronic and Meta.”

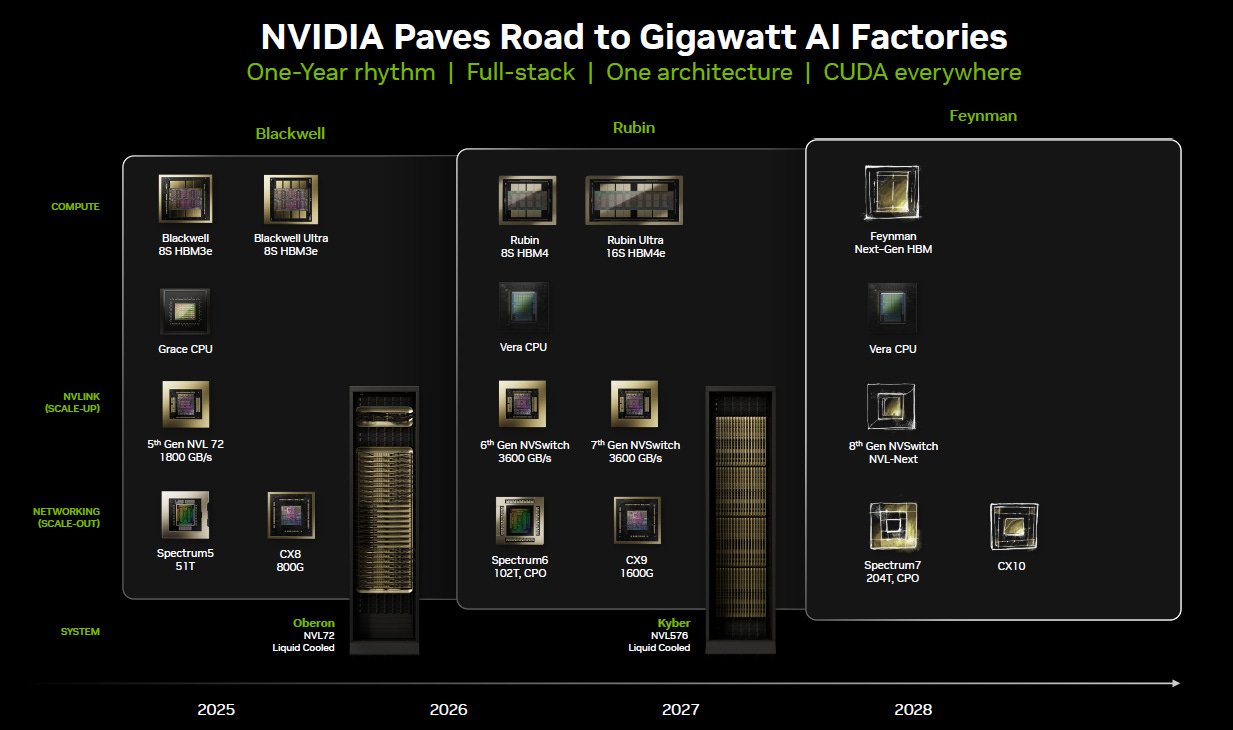

Nvidia will continue to see automatic ASP increases as next-gen products are manufactured on more advanced nodes and carry more HBM:

Some analysis circulating online claimed that Nvidia missed on data center revenues. However, the shortfall was caused by the halt in H20 sales to China, where revenues declined $4 billion quarter on quarter. As we know, Jensen has been talking regularly to the Trump administration, while Washington's understanding of the importance of having the dominant AI stack (hardware + software) continues to grow. President Trump himself mentioned at a recent press conference that he would be happy to allow sales of a modified version of Blackwell into China. So, at this stage, it seems quite likely that billions in China revenues will return in the near future. This is Nvidia’s CFO on the China situation:

“We have not included H20 in our Q3 outlook as we continue to work through geopolitical issues. If geopolitical issues subside, we should ship $2 billion to $5 billion in H20 revenue in Q3. And if we have more orders, we can bill more. We continue to advocate for the U.S. government to approve Blackwell for China. Our products are designed and sold for beneficial commercial use, and every license sale we make will benefit the U.S. economy and U.S. leadership. In highly competitive markets, we want to win the support of every developer. America's AI technology stack can be the world's standard if we race and compete globally.”

So while the current quarter's growth rate took a dip due to H20 sales winding down, next quarter should already see very brisk growth, with revenues growing 17% sequentially, excluding any China sales:

As Nvidia’s CFO expects they could ship $2 billion to $5 billion of H20s into China, there is a lot of upside to next quarter’s number. Consensus for next quarter sits at roughly $54 billion, in line with the CFO's guidance, but revenues could even come in above $60 billion if China sales resume, in combination with a beat on revenues ex-China.
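The upside arithmetic above can be sketched in a few lines. This is a minimal illustration using only the figures quoted in the text (guidance of $54 billion, H20 shipments of $2 billion to $5 billion, a $60 billion scenario); the function names are our own, and the calculation assumes H20 revenue is simply additive to guidance.

```python
# All figures in billions of USD, taken from the text above.
GUIDANCE_EX_CHINA = 54.0   # CFO guidance / consensus, excludes H20
H20_RANGE = (2.0, 5.0)     # potential H20 shipments per the CFO
TARGET = 60.0              # the "above $60 billion" scenario


def revenue_with_h20(guidance: float, h20: float) -> float:
    """Quarterly revenue if H20 sales are simply added to guidance."""
    return guidance + h20


def beat_needed(target: float, guidance: float, h20: float) -> float:
    """Ex-China beat required to reach the target, floored at zero."""
    return max(0.0, target - revenue_with_h20(guidance, h20))


if __name__ == "__main__":
    for h20 in H20_RANGE:
        total = revenue_with_h20(GUIDANCE_EX_CHINA, h20)
        gap = beat_needed(TARGET, GUIDANCE_EX_CHINA, h20)
        print(f"H20 ${h20:.0f}B -> revenue ${total:.0f}B, "
              f"ex-China beat needed for ${TARGET:.0f}B: ${gap:.0f}B")
```

On these numbers, H20 alone takes revenue to $56 billion to $59 billion, so clearing $60 billion also requires an ex-China beat of roughly $1 billion to $4 billion.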

As the Trump administration is currently negotiating a trade deal with China, H20 and future B30 sales could well be included as part of the agreement, with China agreeing to buy more US tech in order to reduce the US trade deficit, a major focus for Trump. This is Jensen on Nvidia’s opportunity in China:

“The China market, I've estimated to be about $50 billion of opportunity for us this year if we were able to address it with competitive products. And if it's $50 billion this year, you would expect it to grow, say, 50% per year. As the rest of the world's AI market is growing as well. China is the second largest computing market in the world, and it is also the home of AI researchers. About 50% of the world's AI researchers are in China. The vast majority of the leading open source models are created in China. And so it's fairly important for the American technology companies to be able to address that market.

Open source is created in one country, but it's used all over the world. The open source models that have come out of China are really excellent. DeepSeek, of course, gained global notoriety. Qwen is excellent. There's a whole bunch of new models that are coming out. They're multimodal and they're great language models. And it's really fueled the adoption of AI in enterprises around the world because enterprises want to build their own custom proprietary software stacks. And so open source models are really important for enterprise, really important for SaaS who also would like to build proprietary systems, and it has been really incredible for robotics around the world.

We're talking to the administration about the importance of American companies to be able to address the Chinese market. H20 has been approved for companies that are not on the entities list, and many licenses have been approved. And so I think the opportunity for us to bring Blackwell to the China market is a real possibility. We just have to keep advocating the sensibility of and the importance of American tech companies to be able to lead and win the AI race, and help make the American tech stack the global standard.”
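To put Jensen's numbers in perspective, the sketch below compounds his $50 billion estimate at his "say, 50% per year" growth rate. The three-year horizon and the assumption of a constant growth rate are ours, purely for illustration; neither comes from the call.

```python
def project_market(start: float, growth: float, years: int) -> list[float]:
    """Market size for year 0..years, compounding at a constant rate."""
    return [start * (1 + growth) ** n for n in range(years + 1)]


if __name__ == "__main__":
    START_BN = 50.0   # Jensen's estimate for this year, in $ billions
    GROWTH = 0.50     # Jensen's "say, 50% per year"
    YEARS = 3         # hypothetical horizon, our assumption

    for n, size in enumerate(project_market(START_BN, GROWTH, YEARS)):
        print(f"year {n}: ${size:.2f}B")
```

At that rate the addressable market would more than triple within three years, which is why access to China matters so much for the long-term numbers.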

A bear thesis on Nvidia is that LLMs aren’t generating revenue. Jensen gave some interesting stats on the call showing that revenue growth is huge, with revenues up tenfold this year:

Altimeter did a similarly interesting analysis in late ’24 of how OpenAI has been growing revenue much faster than Google and Meta did in their first years. Obviously, the latter two became two of the largest companies in the world:

And since then, we know that token usage in the cloud has been exploding in ’25, with hyperscalers such as Microsoft and Google seeing extremely high month-on-month growth rates.

Bears will argue that the cash burn is huge at OpenAI, but it seems likely to us that pricing can be increased much further. Currently, users seem to be getting a near unlimited amount of analysis for around $20 per month, while OpenAI could obviously limit the number of questions you can ask, or alternatively price an unlimited bundle at a much higher ASP. xAI’s Grok has already started limiting usage to 50 questions every few hours.

In recent years, there has been a steady stream of clickbait analysis on X claiming that Nvidia is cooking the numbers, citing as “evidence” that Nvidia’s Singapore revenues are unusually high. Nvidia’s CFO gave the following simple explanation:

“Singapore revenue represented 22% of second quarter's billed revenue as customers have centralized their invoicing in Singapore. Over 99% of data center compute revenue billed to Singapore was for U.S.-based customers.”

We’ve never paid much attention to this clickbait analysis as all obvious channel checks point to very strong demand. When Microsoft tells you that they need to sign multi-year contracts with CoreWeave because they can’t get their hands on sufficient GPU capacity, and all other hyperscalers such as Google and Meta confirm the same, Nvidia’s demand is obviously real.

Nvidia’s challenge is that they need to sell more and more GPUs every year to be a growth company, which introduces obvious cyclical risks at some stage, something semi investors are hugely familiar with. As the current outlook remains very strong, with GPUs still in short supply, we continue to happily hold our position, especially as consensus numbers for the coming quarters also look too low. Nvidia’s valuation also remains undemanding at a 31x forward PE, a multiple where the company usually trades when the market thinks that EPS is peaking:

You don’t really run this cyclical risk with the hyperscalers. For example, Nvidia will be doing $210 billion in sales this year. If its growth rate were to halt or slightly decline at some stage in the coming years, the public clouds would still be adding similar amounts of GPU capacity as before, and each addition brings in extra revenue from renting these GPUs out in their clouds. So, under that scenario, Nvidia would have gone ex-growth for the time being while the public clouds continue to grow at an attractive pace. Therefore, we continue to see Microsoft as the lowest-risk play to get AI exposure. The company is extremely well positioned with Azure to keep growing at high rates for the coming 5-10 years and possibly much longer, while still trading on a reasonable multiple. This is one of the best-positioned businesses in the world and it is fairly non-cyclical, two factors which typically warrant a premium multiple anyway:

For premium subscribers, we will dive into a niche, hardcore semi engineering company that is seeing even higher growth rates from the AI data center than Nvidia. For one of its key products, dollar semi content per accelerator will go up tenfold.