During the Q3 earnings call, TSMC emphasized that AI demand continues to come in stronger than expected, and also that the company will remain disciplined in its capacity planning. Still, with all the fab capacity it has been announcing, it’s clear that its spending plans are big. The point of stressing discipline is to reassure seasoned investors – who all tend to carry scars from just how brutal a downturn can get – that management sees only limited risk of a capacity overhang. Leading-edge semi manufacturing is an extremely capital-intensive business, and high fab utilization is key to generating high margins and positive free cash flow. This is CEO Wei on demand:

“The AI demand actually continues to be very strong, it’s more stronger than we thought 3 months ago. So, we have talked to customers and then we talk to customers’ customer. The CAGR we previously announced is about mid-40s. It’s still a little bit better than that. We will update you probably in beginning of next year, so we have a more clear picture. Today, the numbers are insane.

Talking about the CoWoS capacity, we are working very hard to narrow the gap between the demand and supply. We are still working to increase the capacity in 2026. The real number, we probably update you next year. Today, all I want to say about the everything AI-related, like front-end and back-end capacity, is very tight. We are working very hard to make sure that the gap will be narrow. Right now, we are working with one OSAT, a big company and our good partner, and they are going to build their fab in Arizona. We are working with them because they’re already breaking ground, and the schedule is earlier than TSMC’s two advanced packaging fabs. Our main purpose is to support our customer in the U.S.

If the China market is not available, I still think the AI’s growth will be very dramatic, very positive. I have confidence in my customers, both in GPU or in ASIC, they are all performing well. And so, they will continue to grow, and we will support them. I believe we are just in the early stage of the AI application. So, very hard to make the right forecast at this moment. We pay a lot of attention to our customers’ customer. We discuss with them and look at their application, be it in the search engine or in social media, and then we make a judgment about how AI is going to grow. This is quite different as compared with before, where we only talked to our customers and have an internal study.

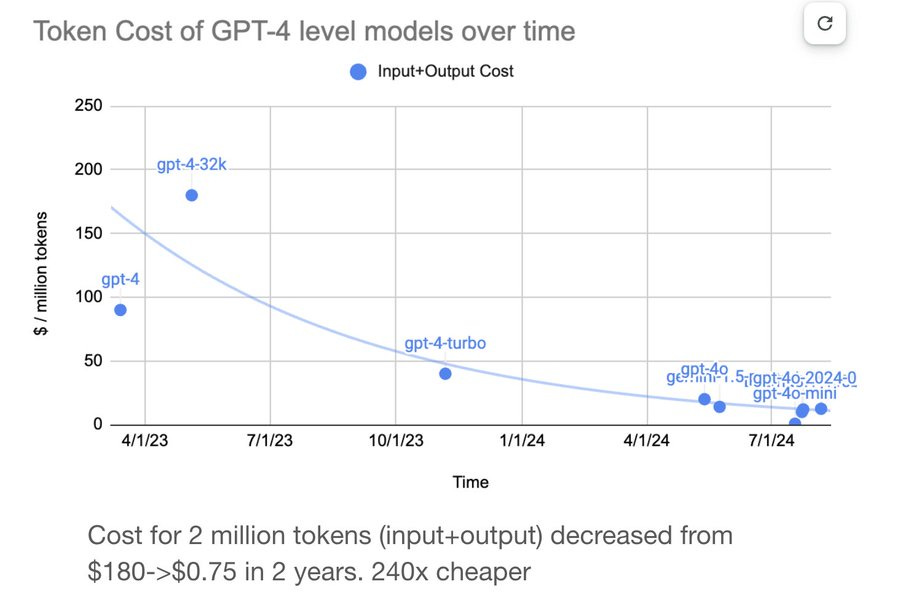

The number of tokens increase is exponential and I believe that almost every 3 months, it will exponentially increase. And that’s why we are still very comfortable that the demand on leading edge semiconductor is real. The tokens increase is much, much higher than TSMC’s CAGR we forecasted. Our technology continues to improve and so our customers moving from one node to the next node, they can handle much more tokens. And that’s why we say that we have about a 40-45% CAGR.”
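To get a feel for these growth rates, here is a quick compounding sketch in Python. The 40-45% range is the CAGR quoted above. The five-year horizon and the quarterly doubling of tokens are purely illustrative assumptions (the call gave no token growth figure), and the function names are our own.

```python
def compound(rate: float, years: float) -> float:
    """Growth multiple after `years` at an annual growth `rate` (0.45 = 45%)."""
    return (1 + rate) ** years


def annualized(multiple_per_period: float, periods_per_year: float) -> float:
    """Annual growth rate implied by a growth multiple per period."""
    return multiple_per_period ** periods_per_year - 1


# CAGR range quoted on the call; the 5-year horizon is an assumption.
HORIZON_YEARS = 5
for cagr in (0.40, 0.45):
    multiple = compound(cagr, HORIZON_YEARS)
    print(f"{cagr:.0%} CAGR -> {multiple:.1f}x over {HORIZON_YEARS} years")

# HYPOTHETICAL: tokens double every quarter (not a figure from the call).
token_annual_growth = annualized(2.0, 4)

# Yearly gain in tokens served per unit of demand that would be needed
# to reconcile the assumed token growth with a 45% CAGR.
implied_efficiency_gain = (1 + token_annual_growth) / (1 + 0.45)

print(f"Assumed token growth: {token_annual_growth:.0%} per year")
print(f"Implied efficiency gain: {implied_efficiency_gain:.1f}x per year")
```

Under these assumptions, tokens would grow 16x in a year against roughly 1.45x for the quoted CAGR, so the remaining gap of about 11x would have to come from serving more tokens per wafer.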

Obviously, a big reason token growth is running much higher than TSMC’s forecasted CAGR is the algorithmic improvements LLM providers are realizing, which let them serve more tokens per chip:

Along with its bullish outlook on AI, TSMC spent a lot of time talking about investment discipline. Below, CC Wei once again emphasizes the company’s discipline, although at the same time he mentions that they are close to securing a second large piece of land in Arizona to build out a giga fab cluster:

“As the world’s most reliable and effective capacity provider, we will continue to work closely with our customers to invest in leading edge specialty and advanced packaging technologies to support their growth. We will also remain disciplined and thorough in our capacity planning approach to ensure we deliver profitable growth for our shareholders. With a strong collaboration and support from our leading U.S. customers and the U.S. federal, state and city governments, we continue to speed up our capacity expansion in Arizona. We are making tangible progress and executing well to our plan. In addition, we are preparing to upgrade our technologies faster to a more advanced process technologies in Arizona, given the strong AI-related demand from our customers.

Furthermore, we are close to securing a second large piece of land nearby to support our current expansion plans and provide more flexibility in response to the very strong multiyear AI-related demand. Our plan will enable TSMC to scale up through an independent giga fab cluster in Arizona to support the needs of our leading-edge customers in smartphone, AI and HPC applications. In Taiwan, with support from the Taiwan government, we are preparing for multiple phases of 2-nanometer fab in both Hsinchu and Kaohsiung Science Parks. We will continue to invest in leading edge and advanced packaging facilities in Taiwan over the next several years.”

It’s clear that demand for N2 is going to be massive. Historically, leading-edge semi demand was driven by Apple’s latest iPhone, with all other advanced semi designers – such as Nvidia – one or two nodes behind Apple. However, due to the explosion in AI demand, it now makes sense for the various players competing in AI acceleration to make the additional investment and move to the leading-edge node more swiftly. This will obviously be a big positive for TSMC, as leading-edge wafers come at much higher ASPs and a faster ramp of the latest node boosts margins. This is TSMC’s CFO on how N2 will be very profitable and how margin dilution from a new node ramp is becoming much less of an issue:

“Sunny, let me share with you, N2’s structural profitability is better than the N3. Secondly, it’s less meaningful nowadays to talk about how long it will take for a new node to reach to a corporate average in terms of profitability. And that’s because the corporate profitability, the corporate gross margin moves and generally, it has been moving upwards. So less meaningful to talk about that, okay?”

CC Wei commented on how TSMC will ramp N2 fast on the back of both smartphone and high-performance computing (AI) demand:

“Finally, let me talk about our N2 and A16 status. Our 2-nanometer and A16 technologies lead the industry in addressing sizable demand for energy-efficient computing, and almost all innovators are working with TSMC. N2 is well on track for volume production later this quarter. With full year, we expect a faster ramp in 2026, fueled by both smartphone and HPC AI applications. With our strategy of continuous enhancement, we also introduced N2P as an extension of our N2 family. N2P feature further performance and power benefits on top of N2 and volume production is scheduled for second half ‘26. We also introduced A16 featuring our best-in-class Super Power Rail or SPR. A16 is best suited for specific HPC product with compressed signal routes and dense power delivery networks. Volume production is on track for second half ‘26. We believe N2, N2P, A16 and its derivatives will propel our N2 family to be another large and long-lasting node for TSMC.”

The chart below shows that TSMC’s nodes have long lifetimes, while the more advanced nodes bring in most of the revenue growth:

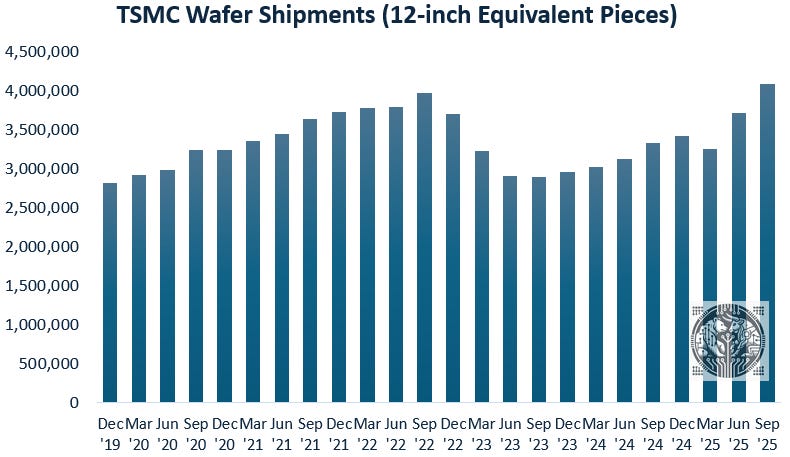

As advanced capacity comes at higher ASPs, TSMC benefits both from wafer volume growth and increased pricing over time:

Looking at recent quarters, wafer shipments picked up strongly in the June and September quarters:

For premium subscribers, we will dive further into current developments in leading-edge semis, TSMC, and ASML, and into which of the two names should be the better performer in the coming years.