An Under-the-Radar Leader in the Biotech Value Chain

+ AI on Fire with Cadence, Microsoft & Meta

Cadence, Microsoft & Meta on Fire

Three of the best-positioned companies in the AI value chain, Cadence, Microsoft and Meta, produced stellar results this week. What stood out from Cadence’s conference call is that the company continues to automate more semiconductor design workflows with AI. This is the CEO on the call:

“Our core EDA revenue grew 16% year-over-year in Q2. Further proliferation of our digital full flow at the most advanced nodes continued, and more than 50% of advanced nodes designs using our implementation solutions are now using Cadence Cerebrus. In Q2, we launched Cadence Cerebrus AI Studio, the industry's first Agentic AI, multi-block and multiuser SoC design platform. This technology is delivering up to 20% PPA improvement while accelerating chip delivery time by 5x to 10x and was endorsed by Samsung and STMicroelectronics at launch. Renesas successfully used our Pegasus physical verification solution to sign off an advanced node SoC after it demonstrated a significant throughput advantage.

More than 50% of our designs are already using Cerebrus which is classical AI. But now with Cerebrus AI Studio, it's a whole workflow. It's an agentic AI solution instead of just doing block implementation—it does floor planning, timing closure, … So what typically a designer could do in like a 3 million to 5 million instance design, now they could do 30 million to 50 million instances. So it's a massive productivity and PPA benefit as the AI does more of the manual work that was manual in the past. Then there is verification and RTL writing. This whole notion of LLMs generating and reasoning code is a big thing, not just in software development with C/C++, but also in chip design and RTL. So those two areas are very, very positive. One is in the front end with RTL generation and verification, and the other is in the back end in PPA optimization.

So we are focused on innovation and productivity, and we have all kinds of business models to monetize that in any way, and we'll see how that progresses over time. In EDA, we have already provided over the years a massive level of automation. Now it was not because of AI, it was because of classical methods. If I look at like 20 years ago to now, EDA productivity has gone up by like 100x. What used to take 500 people and five years to design, will now take 50 people one year to design. Our users are already used to a lot of automation.”

A big driver of Cadence’s strong results is likely that many AI accelerator designs are moving to more advanced nodes as well as to 2.5D and 3.5D packaging, creating much bigger systems that have to be designed and simulated. This is Cadence’s CEO on how both Moore’s Law and 3D-IC will be tailwinds for them in the future:

“The workload of our customers is going up exponentially because of Moore's Law and 3D-IC. In chip design, by 2030, the chips will be 1 trillion transistors. Right now, they're 100 billion to 200 billion transistors. Then you add all the software and you add the new architecture, the workload will go up by 30, 40x in the next five years. There's not even enough talent to hire to meet that requirement of 30x. I think the headcount in our customers' R&D will go up maybe by 2x, 3x. But the remaining gap of 10x in productivity has to be made up with more automation. And customers are willing to invest in agentic AI and more compute to balance that because there's not even that many engineers you can hire.

This is only in the beginning. So Moore's Law, first of all, is going to go to at least 1 nanometer. We are at 3, then 2, 1.41, 1, that's 10 years, and I visited some of our research partners like Imec and they're planning Moore's Law to go until 2042 with new transistor structures. But at least for the next 10 years, I see Moore's Law being strong. Then this 3D-IC and heterogeneous integration provide orthogonal levels of integration. And if you look at TSMC and other road maps, they have very aggressive road maps to be able to put more and more chips in a package. So we are pushing on both of these dimensions.”
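The productivity gap described in the quote above can be checked with a few lines of arithmetic. This is a minimal sketch, assuming the gap is simply workload growth divided by headcount growth; the function name `required_productivity_gain` is ours for illustration and the inputs are the ranges quoted on the call (workload up 30-40x, customer R&D headcount up 2-3x).

```python
def required_productivity_gain(workload_growth: float, headcount_growth: float) -> float:
    """Productivity multiple per engineer needed so that
    headcount_growth * productivity == workload_growth (our simplifying assumption)."""
    if headcount_growth <= 0:
        raise ValueError("headcount_growth must be positive")
    return workload_growth / headcount_growth


# Ranges quoted on the call: workload up 30-40x, headcount up 2-3x.
scenarios = [(30, 3), (30, 2), (40, 3), (40, 2)]
gaps = {s: required_productivity_gain(*s) for s in scenarios}

for (workload, headcount), gap in gaps.items():
    print(f"workload {workload}x, headcount {headcount}x -> productivity gap {gap:.1f}x")
```

The 30x workload and 3x headcount case reproduces the roughly 10x gap mentioned on the call. The other combinations of the quoted ranges imply a gap of about 13x to 20x, so under this simple model 10x is the low end.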

IP was particularly strong for Cadence. This is the CEO on IP:

“I am much more optimistic in IP than I was two or three years ago, and there are multiple reasons for that. One, we are investing more in IP now because our EDA position is very strong. For years, we invested in EDA and IP, historically, we didn't invest as much. But things have changed. This emergence of chiplet-based architectures provides more opportunities for IP. The emergence of multiple advanced node foundries, there are at least four major ones now, provides more opportunities for IP. And our portfolio has also improved with some good M&A, we got HBM4 from Rambus and there are several others over the last few years. So I feel now we have crossed like a critical mass for IP to be a good business for us. I do expect in the longer term that IP can grow faster than Cadence on average, but it will have slightly lower margins than EDA.”

The other great company that had a stellar quarter was Microsoft, where Azure constant currency growth came in at 39%.

AWS has been lagging, especially in recent years, due to their focus on Trainium accelerators. Nvidia has been making sure that clouds that are fully committed to the Nvidia ecosystem have access to the latest GPUs, and this is why Microsoft closed a deal with CoreWeave to integrate both companies’ data center networks. This way, AI workloads from Azure customers can be offloaded to CoreWeave GPUs when Microsoft is in short supply (which is practically always). This all happens behind the scenes and customers are not even aware they use CoreWeave, as they simply call Azure APIs from their software. This is Nadella on the current explosion in AI workloads:

“The rate of innovation and the speed of diffusion is unlike anything we have seen. To that end, we are building the most comprehensive suite of AI products and tech stack at massive scale. Azure surpassed $75 billion in annual revenue, up 34%, driven by growth across all workloads. We continue to lead the AI infrastructure wave and took share every quarter this year. We opened new DCs across 6 continents and now have over 400 data centers across 70 regions, more than any other cloud provider. There is a lot of talk in the industry about building the first gigawatt and multi-gigawatt data centers. We stood up more than 2 gigawatts of new capacity over the past 12 months alone. And we continue to scale our own data center capacity faster than any other competitor.

Every Azure region is now AI-first. All of our regions can now support liquid cooling, increasing the fungibility and the flexibility of our fleet. And we are driving and riding a set of compounding S curves across silicon, systems and models to continuously improve efficiency and performance for our customers. For example, GPT4o family of models, which have the highest volume of inference tokens, through software optimizations alone we are delivering 90% more tokens for the same GPU compared to a year ago.

The next layer is data, which is foundational to every AI application. Microsoft Fabric is becoming the complete data and analytics platform for the AI era, spanning everything from SQL to NoSQL and analytics workloads. It continues to gain momentum with revenue up 55% year-over-year and over 25,000 customers. It's the fastest-growing database product in our history. Fabric OneLake spans all databases and clouds, including semantic models from Power BI, and therefore it is the best source of knowledge and grounding for AI applications and context engineering. Azure Databricks and Snowflake on Azure both accelerated as well. Cosmos DB and Azure PostgreSQL are both powering mission-critical workloads at scale. OpenAI, for example, uses Cosmos DB in the hot path of every ChatGPT interaction, storing chat history, user profiles and conversational state. And Azure PostgreSQL stores metadata critical to the operation of ChatGPT as well as OpenAI's developer APIs.

This year, we launched Azure AI Foundry to help customers design, customize and manage AI applications and agents at scale. Foundry features best-in-class tooling, management, observability and built-in controls for trustworthy AI. Customers increasingly want to use multiple AI models to meet their specific performance, cost and use case requirements. And with Foundry, they can provision inferencing throughput once and apply it across more models than any other hyperscaler, including models from OpenAI, DeepSeek, Meta, xAI's Grok and, very soon, Black Forest Labs and Mistral AI. 80% of Fortune 500 already use Foundry and when we look narrowly at just the number of tokens served by Foundry APIs, we processed over 500 trillion this year, up over 7x.”

Finally, we have Meta, where AI is an obvious driver to show better ads and content feeds on their platforms, increasing ad engagement and time spent within their apps. In addition, Zuck is also investing heavily for Meta to become the leading player in AI model development, with both big investments in talent and massive GPU clusters such as a future 5GW one, Hyperion:

“I've spent a lot of time building this team this quarter. And the reason that so many people are excited to join is because Meta has all of the ingredients that are required to build leading models and deliver them to billions of people. The people who are joining us are going to have access to unparalleled compute as we build out several multi-gigawatt clusters. Our Prometheus cluster is coming online next year, and we think it's going to be the world's first gigawatt-plus cluster. We're also building out Hyperion, which will be able to scale up to 5 gigawatts over several years, and we have multiple more titan clusters in development as well. We are making all these investments because we have conviction that superintelligence is going to improve every aspect of what we do.”

We’ve been hearing stories that tech is in a bubble for close to 10 years now. It’s a simple reality that the stocks we discussed above are some of the best-positioned companies in the world, with wide moats and structurally high top-line growth. For a company producing 15% top-line growth this year, Microsoft’s 34x multiple is not a stretch. And the company will be growing at attractive double-digit rates for a long time. We would argue that Azure is probably the best business in the world. So yes, the valuation is not a steal, but Microsoft remains a good place to invest for the long term.
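To illustrate why a 34x multiple can be reasonable for a steady grower, here is a rough sketch of how the multiple on today's price shrinks as earnings compound. It rests on assumptions that are ours and not stated above: that 34x is an earnings multiple, that earnings grow in line with the 15% top-line rate, and that the share price stays flat. The helper `multiple_after` is hypothetical.

```python
def multiple_after(years: int, starting_multiple: float, annual_growth: float) -> float:
    """Multiple paid on today's price relative to earnings `years` from now,
    assuming earnings compound at `annual_growth` and the price does not move."""
    return starting_multiple / (1 + annual_growth) ** years


# Assumed inputs: 34x starting multiple, 15% annual earnings growth.
for years in (0, 3, 5):
    print(f"year {years}: {multiple_after(years, 34.0, 0.15):.1f}x")
```

Under these assumptions, today's price would equate to roughly 22x earnings in three years and roughly 17x in five, which is the sense in which the current multiple is not a stretch if growth holds.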

In our view, these are very low-risk returns.

Cadence is a similar story. The valuation is not cheap, but you get an extremely good business in return, with exposure to attractive growth rates due to the race in leading-edge semi design (including ASICs), AI automation and IP. So again, we think this remains a good place to invest for the long term.

We’ve had a position in this stock for a long time and it just keeps accumulating:

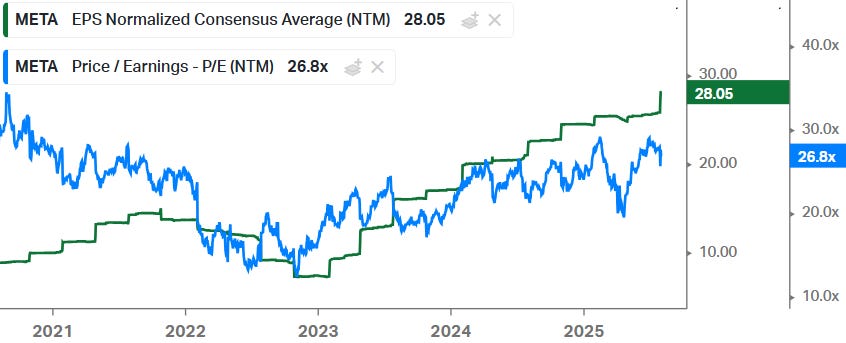

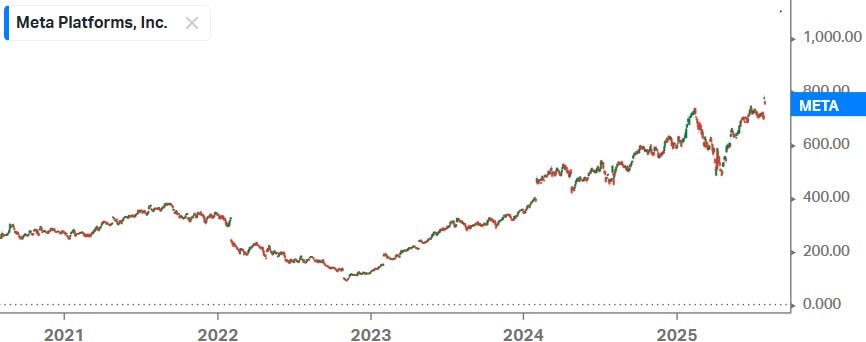

Finally, we continue to happily hold our Meta shares as well. The stock trades at a 27x PE for a company that is currently growing its top line at close to 20%.

So, we think that the above names will continue to be good and fairly low-risk investments for the long term. The main risk is a macro downturn at some stage, which would compress valuations for one or two years. However, the above stories are well understood by a large group of investors. Next, for premium subscribers, we will dive into a smaller name in the biotech value chain that’s in the earlier stages of its growth runway. The company is already profitable, has an interesting competitive advantage and a dominant market share in its niche, and is generating high levels of growth. It’s also not a name we’ve ever seen mentioned on social media platforms such as X or in financial media.