AI Winners & Losers

Stock Picks in Tech

The biggest news this week was the new partnership between Nvidia and Intel, under which Nvidia’s Client GPUs will be integrated into Intel’s Client CPUs and vice versa, with Intel’s data center CPUs being integrated into Nvidia’s NVLink ecosystem. This is a smart deal and a win for both sides. Intel still dominates in client CPUs (PCs + Notebooks), and it now becomes easier for Nvidia to access this large market ($31 billion in Intel Client revenues). Previously, Nvidia was only addressing the gaming portion of the client market with its standalone GPUs. The nice growth angle is that this deal leaves Nvidia extremely well positioned to capture the AI PC market. Thanks to its CUDA computing platform, Nvidia GPUs already provide the strongest platform for on-device AI development, and the company is now further strengthening its position by integrating its platform with the world’s dominant Client CPU.

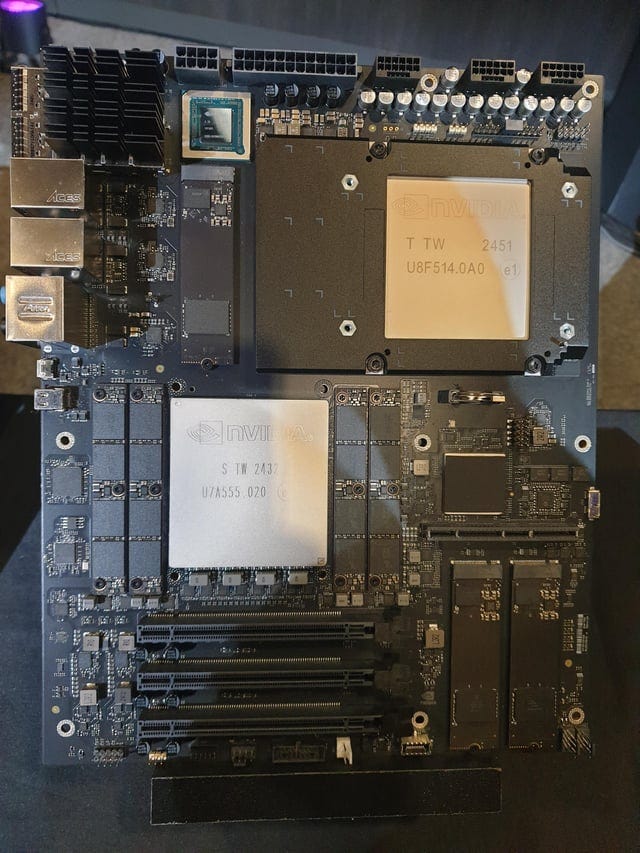

At the same time, Intel has been struggling with share losses in the data center CPU market, most notably to AMD but also to ARM-based CPUs in the public cloud. With this deal, the company can now start participating in the booming AI data center market, especially in inference. Nvidia is integrating CPUs into its accelerator boards such as the GB300 to reduce overhead. The GB300 is specifically designed for reasoning workloads, and these inference-type workloads will likely enjoy the highest CAGR in the AI data center for the foreseeable future.

In addition, Nvidia will invest $5 billion in Intel via newly issued Intel shares. Intel is currently burning $10 billion of cash every 12 months while its balance sheet is already highly levered, so all the fresh capital that the company has successfully raised over the last few months is a welcome addition to its balance sheet. Intel now has sufficient capital to try to turn around its fortunes for the foreseeable future. It’s possible that Jensen sees this as a strategic investment to keep another leading foundry in the race so that Nvidia doesn’t become fully dependent on TSMC. Nvidia has also used Samsung Foundry in the past, for example for its gaming GPUs. If both Samsung and Intel had to exit the race in leading-edge semiconductor manufacturing at some stage, TSMC would gradually start moving its gross margins up to 60-70%, and Nvidia might then have to shrink its current gross margin of 70-75% to make room. Note that the $5 billion investment is pocket change for Jensen, as Nvidia will generate $100 billion in free cash flow this year.
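To put these figures in proportion, here is a quick back-of-the-envelope sketch in Python. It uses only the numbers quoted above ($5 billion investment, roughly $10 billion of annual cash burn at Intel, roughly $100 billion of annual free cash flow at Nvidia). The function and constant names are our own, and the calculation assumes a constant burn rate, which is a simplification.

```python
# Back-of-the-envelope figures in billions of USD, taken from the paragraph above.
NVIDIA_INVESTMENT = 5.0    # Nvidia's purchase of newly issued Intel shares
INTEL_ANNUAL_BURN = 10.0   # Intel's approximate cash burn per 12 months
NVIDIA_ANNUAL_FCF = 100.0  # Nvidia's expected free cash flow this year


def months_of_burn_covered(capital: float, annual_burn: float) -> float:
    """Months of cash burn that `capital` funds, assuming a constant burn rate."""
    return capital / annual_burn * 12


def share_of_cash_flow(outlay: float, annual_fcf: float) -> float:
    """Outlay expressed as a fraction of one year's free cash flow."""
    return outlay / annual_fcf


runway = months_of_burn_covered(NVIDIA_INVESTMENT, INTEL_ANNUAL_BURN)
share = share_of_cash_flow(NVIDIA_INVESTMENT, NVIDIA_ANNUAL_FCF)

print(f"Investment covers about {runway:.0f} months of Intel's cash burn")
print(f"Investment equals about {share:.0%} of Nvidia's annual free cash flow")
```

On these numbers, the investment by itself funds about six months of Intel's burn while costing Nvidia about 5% of a single year's free cash flow.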

Intel’s EPS have evaporated, and as a result its forward PE is now 140x:

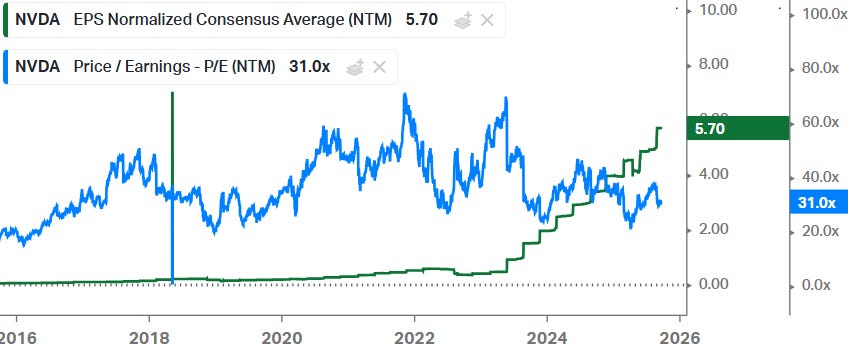
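The mechanics behind a multiple like this are simple: forward PE is the share price divided by the EPS expected over the next twelve months, so when expected EPS shrinks toward zero the ratio balloons even if the price barely moves. The Python sketch below illustrates the effect. The share price and EPS values are hypothetical round numbers chosen only for illustration, not Intel's actual figures, and the function name is our own.

```python
def forward_pe(price: float, forward_eps: float) -> float:
    """Forward price-to-earnings ratio: share price divided by expected EPS."""
    if forward_eps <= 0:
        raise ValueError("forward PE is not meaningful for zero or negative EPS")
    return price / forward_eps


# Hypothetical inputs for illustration only (not actual company data).
PRICE = 30.0
for eps in (3.0, 1.5, 0.5, 0.25):
    print(f"EPS {eps:>5.2f} -> forward PE {forward_pe(PRICE, eps):>6.1f}x")
```

With the price held fixed, each halving of expected EPS doubles the multiple, which is why a very high forward PE can signal collapsing earnings rather than an expensive stock.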

At Nvidia we get the reverse image, with EPS continuing to explode while the forward PE stays in line with history:

Despite a narrative that software will get disrupted by AI, the best names in software typically enjoy high switching costs for their customers. And besides being a threat, AI also represents a new opportunity to monetize further workloads. ServiceNow is a good example here, and Goldman recently caught up with the company:

“On the narrative that AI could erode seat-based models, ServiceNow highlighted its hybrid pricing approach that blends both seat-based subscriptions with usage-based AI monetization. Management noted that the company has observed no seat dilution thus far, and at the same time, it has applied an AI premium to the platform and pricing strategy with a 30% uplift in pricing through token-allotment for AI agents. As agentic AI proliferates across the enterprise over time, ServiceNow sees a path that tokens are consumed at a faster rate and the “reload” of these tokens can become an additive revenue stream. Management noted the company has seen minimal friction from customers following the 30% AI-premium pricing uplift, as evidenced by the +50% QoQ growth in Now Assist.

With the recent Zurich release, ServiceNow added 1,200 new agentic AI capabilities, reinforcing the vision for the platform to be the AI control tower that orchestrates agents across heterogeneous systems. The platform’s open posture—integrating with any major cloud, LLM, and enterprise data source—allows customers to unify existing technology stacks while automating end-to-end processes. This strategy is reinforced by ServiceNow’s operational data advantage: approximately 65B workflows and 1T transactions in-flight provide rich, context-rich data that improves grounding, intent understanding, and continuous agent learning. Management notes that AI agents already operate a significant portion of internal workflows, with ~90% of IT, HR, and sales cases handled by agents today, signaling execution towards the company’s long-term AI strategy.”

ServiceNow is a platform for organizing workflows in companies. The risk is that AI could resolve more workflows on its own in the future. Even then, however, the AI would still require the necessary approvals, since letting AI agents loose inside an enterprise’s digital infrastructure obviously carries too much risk. AI agents will therefore have to operate within set boundaries, with clear limits on the data they can access and the changes they’re allowed to make. Any significant changes will likely also have to be reviewed by a human first for approval. Software that organizes all workflows and keeps a central audit trail therefore remains necessary. ServiceNow has been a train (chart below), and we don’t see AI at this stage as a disruptor that’s going to derail this story. On the contrary, deploying AI agents within ServiceNow provides a large new opportunity.

For premium subscribers, we will dive further into AI winners and losers.