AI Outlook & Stock Picks

A tour of developments in AI

In the last few months, OpenAI has announced deals with all major accelerator providers, both GPU and ASIC: Nvidia, Broadcom, AMD and Google (via Google’s Cloud Platform, where it could leverage TPUs). OpenAI’s long-term aim is a hardware-agnostic approach to AI model deployment, allowing the company to run its LLMs on multiple hardware platforms. We’ve written extensively about software stacks in the past, and they are exactly what makes this approach possible for OpenAI.

The company is working on Triton, an open-source language and compiler that lets engineers write optimized AI kernels in Python-like code and then deploy that code on a variety of accelerators. The key goal is that AI engineers will no longer need to know CUDA, ROCm or ASIC intrinsics. A recent ByteDance paper illustrates this idea, with the engineers deploying the same Triton codebase on both Nvidia and AMD GPU clusters:

“In this report, we propose Triton-distributed, an extension of existing Triton compiler, to overcome the programming challenges in distributed AI systems. Triton-distributed is the first compiler that supports native overlapping optimizations for distributed AI workloads, providing a good coverage of existing optimizations from different frameworks. First, we integrate communication primitives compliant with the OpenSHMEM standard into the compiler. This enables programmers to utilize these primitives with a higher-level Python programming model. … Finally, we showcase the performance of the code generated by our compiler. In a test environment with up to 64 devices, our compiler can fully utilize heterogeneous communication and computation resources to provide effective overlapping and high performance. In many cases, the performance of the generated code can even outperform hand-optimized code. Moreover, the development difficulty and the time cost for development using our compiler are far less than those of low-level programming such as CUDA/C++, which clearly demonstrates significant productivity advantages.”

The idea of hardware abstraction to deploy code seamlessly on a variety of underlying hardware is nothing new. This is exactly the setup that powers hyperscalers and the public cloud. Write an app, package it in a container (a lightweight, isolated environment), and the container orchestrator will schedule it on available hardware. So, the days of writing app code for one particular OS or CPU are largely over. Kubernetes is the main container orchestrator and was open sourced by Google in 2014, based on their internal system called ‘Borg’. This kind of abstraction allowed Amazon’s AWS to shift customer workloads to its own in-house ARM-based CPUs and away from x86-based silicon from Intel and AMD.

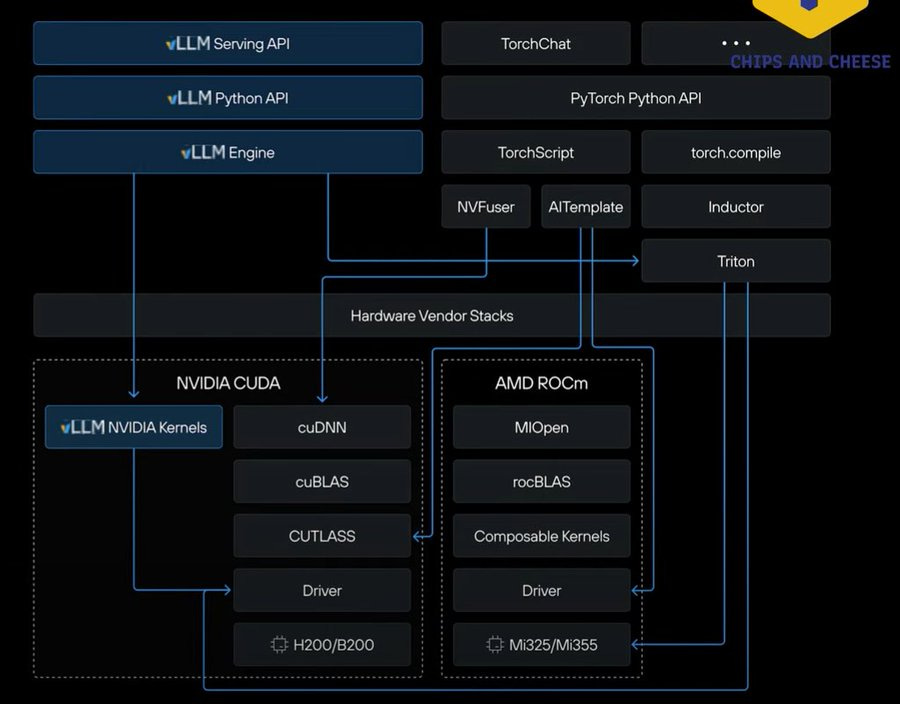

In the world of AI, an increasing amount of hardware abstraction is similarly emerging. The highest-level layer is PyTorch (a Python-based AI library), and below it sits Triton, a Python-like mid-level language for further customizing AI algorithms. Both are gradually becoming more sophisticated and leverage underlying vendor-specific computing platforms such as Nvidia’s CUDA and AMD’s ROCm.

This is exactly why AMD has been working so hard on ROCm. The goal is to integrate seamlessly with the higher-level PyTorch and Triton platforms, which most AI engineers prefer to work in. We fully agree with the remarks from the ByteDance engineers above that C++ (the language CUDA is based on) can be a pain to work in, so if one can write AI code in Python (either PyTorch or Triton) instead, this becomes a no-brainer.
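To make the layering idea concrete, here is a toy sketch in plain Python. It is not Triton's or PyTorch's actual API: the registry, the backend names `cuda_like` and `rocm_like`, and the `vector_add` function are all hypothetical stand-ins that run on the CPU. The point is only that the calling code stays the same while the backend underneath is swapped.

```python
from typing import Callable, Dict, List

# Hypothetical registry mapping a backend name to an implementation.
# In a real stack this is where vendor platforms such as CUDA or ROCm
# would be targeted; these stand-ins simply run on the CPU.
Backend = Callable[[List[float], List[float]], List[float]]
BACKENDS: Dict[str, Backend] = {}


def register_backend(name: str) -> Callable[[Backend], Backend]:
    """Decorator that records a backend implementation under `name`."""
    def wrap(fn: Backend) -> Backend:
        BACKENDS[name] = fn
        return fn
    return wrap


@register_backend("cuda_like")
def _add_blocked(x: List[float], y: List[float]) -> List[float]:
    # Stand-in for one vendor path: processes elements in fixed-size blocks.
    block = 4
    out: List[float] = []
    for start in range(0, len(x), block):
        xs = x[start:start + block]
        ys = y[start:start + block]
        out.extend(a + b for a, b in zip(xs, ys))
    return out


@register_backend("rocm_like")
def _add_flat(x: List[float], y: List[float]) -> List[float]:
    # Stand-in for a second vendor path with a different internal strategy.
    return [a + b for a, b in zip(x, y)]


def vector_add(x: List[float], y: List[float], backend: str) -> List[float]:
    """High-level entry point: callers never see backend-specific details."""
    if len(x) != len(y):
        raise ValueError("inputs must have the same length")
    if backend not in BACKENDS:
        raise KeyError(f"unknown backend: {backend}")
    return BACKENDS[backend](x, y)
```

Calling `vector_add(x, y, "cuda_like")` and `vector_add(x, y, "rocm_like")` returns the same result for the same inputs, which is the property that lets the higher-level code ignore the hardware underneath.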

Below are notes from the Evercore analyst who caught up with AMD’s management a few months ago. They highlight the progress the company has been making on ROCm:

“AMD’s aspiration is to make ROCm the industry’s Open-Source stack of choice. It has ramped investment into ROCm adding ~800 software engineers last year through M&A and hiring for a total of several thousand employees. AMD has increased the software release cadence of ROCm to 2x/month recently vs 1x/quarter previously. AMD made the case that ROCm’s improvement can be seen in its success in getting AI models to be performant out of the box on Day 1. AMD has focused its development resources on increasing the number of working models by working with hyperscalers. AMD management believes that AI workloads continue to evolve at extraordinary speed and that programmable merchant GPUs will account for the majority of AI compute share, due to their flexibility. AMD expects ASICs to co-exist with GPUs, largely targeting more stable algorithms. Consistent with our checks, AMD believes customers want a sustainable GPU competitor with scale.”

So, the goal here is that all AI algorithms can be smoothly deployed on AMD hardware, with higher-level platforms making the necessary API calls to ROCm, which takes care of all the low-level plumbing. AMD’s CEO, Lisa Su, talked about the importance of these software stacks on this week’s analyst call:

“AI is the most transformative technology of the last 50 years, and we are in the very early stages of the largest deployment of compute capacity in history. Over the last several years, we have been laser-focused on making AMD the trusted provider for the industry’s most demanding AI workloads. And we’ve done this by delivering an annual cadence of leadership data center GPUs, significantly strengthening our ROCm software stack to enable millions of models to run out of the box on AMD, and expanding our Rack Scale solutions capabilities. Today’s announcement builds on our long-standing collaboration with OpenAI that has spanned across the Instinct MI300 and MI350 series, our ROCm software stack and open-source software like Triton. On the OpenAI side, they’ve been big proponents of Triton from an open ecosystem standpoint. Triton is basically a layer that allows you to be much more hardware agnostic in how you put together the models.

Against this backdrop, I’m very happy to announce that OpenAI and AMD have signed a comprehensive multiyear multi-generation definitive agreement to deploy 6 gigawatts of AMD Instinct GPUs. AMD and OpenAI will begin deploying the first gigawatt of Instinct MI450 Series GPU capacity in the second half of 2026, making them a lead customer for both MI450 and Helios at massive scale. OpenAI has also been a key contributor to the requirements of the design of our MI450 Series GPUs and Rack Scale solutions. Under this agreement, we are deepening our strategic partnership, making AMD a core strategic compute partner to OpenAI, and powering the world’s most ambitious AI build-out to train and serve the next generation of frontier models.

To accomplish the objectives of this partnership, AMD and OpenAI will work even closer together on future road maps and technologies, spanning hardware, software, networking and system-level scalability. By choosing AMD Instinct platforms to run their most sophisticated and complex AI workloads, OpenAI is sending a clear signal that AMD GPUs and our open software stack deliver the performance and TCO required for the most demanding at scale deployments. From a revenue standpoint, revenue begins in the second half of 2026 and adds double-digit billions of annual incremental data center AI revenue once it ramps. And it also gives us clear line of sight to achieve our initial goal of tens of billions of dollars of annual data center AI revenue starting in 2027. We would expect for each gigawatt of compute, significant double-digit billions of revenue for us.”

AMD’s progress with ROCm and OpenAI’s progress with Triton are among the main reasons why we grew much more bullish on AMD earlier in the year. This is some of what we wrote back in May, with the share price at $100:

“In the last decade, AMD has been heavily focused on their battle with Intel in the CPU market—where they did excellently by consistently taking share—however, the drawback is that they almost completely missed the opportunity in AI data center acceleration. The latter turned out to be the most lucrative semi opportunity in history, at least so far. The big competitive disadvantage for AMD is that they didn’t have their software stack ready. All AI training and inference can smoothly be implemented on Nvidia’s computing platform, CUDA, whereas AMD’s computing platform ROCm remained really a work in progress.

Obviously Lisa Su is very smart and she’s aware of this, so the focus over the last two years has been on getting ROCm ready, with a similar C++ inspired coding language so that CUDA developers can easily get up and running on ROCm. Additionally, the company has been developing tools such as HIPIFY to port CUDA code over to ROCm and thus AMD GPUs. Looking at GitHub, clearly AMD’s ecosystem is starting to gain traction. The key AI framework these days is Pytorch and if we go to their website, we can see that Pytorch can run out of the box over ROCm now.

At the same time, AMD has the budget to design silicon at TSMC’s leading nodes and could be moving to N3 even ahead of Nvidia. So, all ingredients are now getting ready for AMD to gradually start taking their share of the inference market in the coming years. Obviously, even if they can only take 10 to 20 percent from Nvidia, this provides large upside. AMD is basically a $30 billion business in annual revenues compared to Nvidia’s $200 billion. A director at Microsoft already mentioned that they will be looking to split their AI silicon between Nvidia, ASICs and AMD.

Despite the boom in AI, AMD has been a poor performer over the last two years with the share price basically where it was two years ago. However, the multi-year outlook should be looking much better at this stage. Inference should become a strong growth market and it seems likely that the company will be able to take their share of this market in the coming two-three years, with a valuation that is accommodative.”
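To put rough numbers on the share-gain argument in the passage above, here is a minimal sketch in Python. It assumes, purely for illustration, that the 10 to 20 percent share applies to Nvidia’s full $200 billion annual revenue base and that all captured revenue is simply added to AMD’s roughly $30 billion. The function name and the scenario structure are our own and do not come from any company guidance.

```python
from typing import Dict, List


def share_gain_scenarios(
    amd_revenue_bn: float,
    nvidia_revenue_bn: float,
    share_pcts: List[int],
) -> List[Dict[str, float]]:
    """Return one scenario per assumed share (in percent) taken from Nvidia."""
    scenarios = []
    for share_pct in share_pcts:
        # Revenue captured if AMD takes `share_pct` percent of Nvidia's base.
        captured_bn = nvidia_revenue_bn * share_pct / 100
        scenarios.append({
            "share_pct": float(share_pct),
            "captured_bn": captured_bn,
            "amd_total_bn": amd_revenue_bn + captured_bn,
            # Uplift relative to AMD's current revenue, in percent.
            "uplift_pct": 100 * captured_bn / amd_revenue_bn,
        })
    return scenarios


if __name__ == "__main__":
    # Figures from the passage: AMD ~$30bn, Nvidia ~$200bn, 10-20% share.
    for row in share_gain_scenarios(30.0, 200.0, [10, 20]):
        print(
            f"{row['share_pct']:.0f}% share: "
            f"+${row['captured_bn']:.0f}bn, "
            f"AMD total ${row['amd_total_bn']:.0f}bn, "
            f"uplift {row['uplift_pct']:.0f}%"
        )
```

Under these simplifying assumptions, a 10 percent share corresponds to $20 billion of captured revenue, or roughly a 67 percent uplift on AMD’s base, and a 20 percent share to $40 billion, or roughly 133 percent.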

For premium subscribers, we will dive much further into current developments in AI, with our current stock picks.